

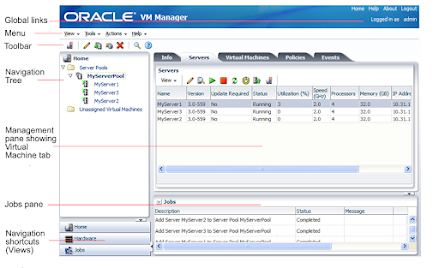

It has been many years since Oracle released its own hypervisor, Oracle VM (OVM). OVM is based on paravirtualization and uses a Xen-based hypervisor. The latest available release is 3.4.6.3. Oracle announced extended support for OVM, which started in March 2021 and ends on March 31, 2024.

Oracle’s next virtualization release is based on KVM, combined with OLVM (Oracle Linux Virtualization Manager).

The Oracle documentation for OLVM is available here: https://docs.oracle.com/en/virtualization/oracle-linux-virtualization-manager.

Some customers are still using OVM. This is the right time for them to plan their move to OLVM.

In this article, I will elaborate on issues we faced when we tried to map repositories to cluster node02.

We faced a new issue in an OVM cluster environment, caused by a sudden data center power outage. Once everything was back online, we could not start the ovs-agent service on the OVM hypervisor. The only option left was a complete reinstallation of the node.

When I tried to add the node back to the cluster, we faced an issue with mounting the repositories. The next option was to remove the node from the cluster again; this was done via the GUI.

In my previous blog, OVM – Remove Stale Cluster Entries, I was able to fix the stale entry issue from the OVM hypervisor side.

But the issue was not resolved. When we tried to present the repositories to node02, we got the error message below.

OVMRU_002036E OVM-Repo2 - Cannot present the Repository to server: calavsovm02. The server needs to be in a cluster. [Thu Jun 22 10:25:34 EDT 2023]

We could mount the repositories manually as a test, but these repositories should mount automatically when a node is added to the cluster.

The GUI shows the node as part of the cluster, but the repositories are not visible on node02.

If the node is part of the cluster, I would recommend removing it from the cluster before making any changes. In this scenario, adding the node to the cluster did not progress past the mount attempt shown below. This log is from the ovs-agent.

"DEBUG (ocfs2:182) cluster debug: {'/sys/kernel/debug/o2dlm': [],

'/sys/kernel/debug/o2net': ['connected_nodes', 'stats', 'sock_containers', 'send_tracking'],

'/sys/kernel/debug/o2hb': ['0004FB0000050000B705B4397850AAD6', 'failed_regions', 'quorum_regions', 'live_regions', 'livenodes'],

'service o2cb status': 'Driver for "configfs": Loaded\nFilesystem "configfs": Mounted\nStack glue driver: Loaded\nStack plugin "o2cb": Loaded\nDriver for "ocfs2_dlmfs": Loaded\nFilesystem "ocfs2_dlmfs": Mounted\nChecking O2CB cluster "f6f6b47b38e288e0": Online\n Heartbeat dead threshold: 61\n Network idle timeout: 60000\n Network keepalive delay: 2000\n Network reconnect delay: 2000\n Heartbeat mode: Global\nChecking O2CB heartbeat: Active\n 0004FB0000050000B705B4397850AAD6 /dev/dm-2\nNodes in O2CB cluster: 0 1 \nDebug file system at /sys/kernel/debug: mounted\n'}

[2023-06-22 11:10:25 12640] DEBUG (ocfs2:258) Trying to mount /dev/mapper/36861a6fddaa0481ec0dd3584514a8d62 to /poolfsmnt/0004fb0000050000b705b4397850aad6 "

Jun 27 12:55:32 calavsovm02 kernel: [ 659.079952] o2net: Connection to node calavsovm01 (num 0) at 10.110.110.101:7777 shutdown, state 7

Jun 27 12:55:34 calavsovm02 kernel: [ 661.080005] o2net: Connection to node calavsovm01 (num 0) at 10.110.110.101:7777 shutdown, state 7

Jun 27 12:55:36 calavsovm02 kernel: [ 663.079916] o2net: Connection to node calavsovm01 (num 0) at 10.110.110.101:7777 shutdown, state 7

Jun 27 12:55:38 calavsovm02 kernel: [ 665.080167] o2net: Connection to node calavsovm01 (num 0) at 10.110.110.101:7777 shutdown, state 7

Jun 27 12:55:40 calavsovm02 kernel: [ 667.079905] o2net: No connection established with node 0 after 60.0 seconds, check network and cluster configuration.
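
The kernel messages above repeat the same o2net failure every two seconds. As a rough aid when a log is much longer than this excerpt, the following Python sketch counts those shutdown lines per peer. The regular expression is an assumption based only on the lines shown here, and the function name `summarize_o2net_failures` is made up for illustration.

```python
import re
from collections import Counter

# Pattern for the o2net "shutdown" lines shown above.
# Assumption: the format is inferred from this excerpt only.
O2NET_SHUTDOWN = re.compile(
    r"o2net: Connection to node (?P<node>\S+) \(num (?P<num>\d+)\) "
    r"at (?P<addr>[\d.]+):(?P<port>\d+) shutdown, state (?P<state>\d+)"
)


def summarize_o2net_failures(log_text):
    """Count o2net connection shutdowns per (node, address, port)."""
    counts = Counter()
    for line in log_text.splitlines():
        match = O2NET_SHUTDOWN.search(line)
        if match:
            key = (match["node"], match["addr"], int(match["port"]))
            counts[key] += 1
    return counts


# Two of the lines from the log above, used as sample input.
SAMPLE_LOG = """\
Jun 27 12:55:32 calavsovm02 kernel: [ 659.079952] o2net: Connection to node calavsovm01 (num 0) at 10.110.110.101:7777 shutdown, state 7
Jun 27 12:55:34 calavsovm02 kernel: [ 661.080005] o2net: Connection to node calavsovm01 (num 0) at 10.110.110.101:7777 shutdown, state 7
Jun 27 12:55:40 calavsovm02 kernel: [ 667.079905] o2net: No connection established with node 0 after 60.0 seconds, check network and cluster configuration.
"""

if __name__ == "__main__":
    for (node, addr, port), count in summarize_o2net_failures(SAMPLE_LOG).items():
        print(f"{node} {addr}:{port} shutdowns={count}")
```

A high count against a single peer address points at the link between the two nodes rather than at the storage itself.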

First, validate that the storage is visible from both nodes. This can be done by running the multipath -ll command on each node.

[root@ovs-node01 ~]# multipath -ll

36861a6fddaa0481ec0dd3584514a8d62 dm-0 EQLOGIC,100E-00

size=16G features='1 queue_if_no_path' hwhandler='0' wp=rw

`-+- policy='round-robin 0' prio=1 status=active

`- 11:0:0:0 sdc 8:32 active ready running

36861a6fddaa0787dbeddf57e514abd8a dm-1 EQLOGIC,100E-00

size=3.0T features='1 queue_if_no_path' hwhandler='0' wp=rw

`-+- policy='round-robin 0' prio=1 status=active

`- 10:0:0:0 sdb 8:16 active ready running

36861a6fddaa0d8306edd157b4d4aed23 dm-2 EQLOGIC,100E-00

size=2.0T features='1 queue_if_no_path' hwhandler='0' wp=rw

`-+- policy='round-robin 0' prio=1 status=active

`- 9:0:0:0 sdd 8:48 active ready running

[root@ovs-node02 ~]# multipath -ll

36861a6fddaa0481ec0dd3584514a8d62 dm-1 EQLOGIC,100E-00

size=16G features='1 queue_if_no_path' hwhandler='0' wp=rw

`-+- policy='round-robin 0' prio=1 status=active

`- 12:0:0:0 sde 8:64 active ready running

36861a6fddaa0787dbeddf57e514abd8a dm-2 EQLOGIC,100E-00

size=3.0T features='1 queue_if_no_path' hwhandler='0' wp=rw

`-+- policy='round-robin 0' prio=1 status=active

`- 11:0:0:0 sdd 8:48 active ready running

36861a6fddaa0d8306edd157b4d4aed23 dm-0 EQLOGIC,100E-00

size=2.0T features='1 queue_if_no_path' hwhandler='0' wp=rw

`-+- policy='round-robin 0' prio=1 status=active

`- 10:0:0:0 sdc 8:32 active ready running

[root@calavsovm02 oswatcher]#
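
Comparing the two outputs by eye works for three LUNs. For more, a small Python sketch like the one below can compare the WWIDs seen on each node. It assumes the map header lines look like the ones above (WWID first, then dm-N, with no user-friendly alias), and the helper names `wwid_map` and `missing_luns` are hypothetical.

```python
import re

# A map header line in the output above looks like:
#   <wwid> dm-N VENDOR,PRODUCT
# Assumption: no user_friendly_names alias precedes the WWID.
MAP_HEADER = re.compile(r"^(?P<wwid>\S+)\s+(?P<dm>dm-\d+)\s+\S+", re.MULTILINE)


def wwid_map(multipath_output):
    """Return {wwid: dm_device} parsed from `multipath -ll` text."""
    return {m["wwid"]: m["dm"] for m in MAP_HEADER.finditer(multipath_output)}


def missing_luns(node_a_output, node_b_output):
    """Return (WWIDs only on node A, WWIDs only on node B), each sorted."""
    a = set(wwid_map(node_a_output))
    b = set(wwid_map(node_b_output))
    return sorted(a - b), sorted(b - a)


# Header and size lines copied from the two outputs above.
NODE01 = """\
36861a6fddaa0481ec0dd3584514a8d62 dm-0 EQLOGIC,100E-00
size=16G features='1 queue_if_no_path' hwhandler='0' wp=rw
36861a6fddaa0787dbeddf57e514abd8a dm-1 EQLOGIC,100E-00
size=3.0T features='1 queue_if_no_path' hwhandler='0' wp=rw
36861a6fddaa0d8306edd157b4d4aed23 dm-2 EQLOGIC,100E-00
size=2.0T features='1 queue_if_no_path' hwhandler='0' wp=rw
"""

NODE02 = """\
36861a6fddaa0481ec0dd3584514a8d62 dm-1 EQLOGIC,100E-00
size=16G features='1 queue_if_no_path' hwhandler='0' wp=rw
36861a6fddaa0787dbeddf57e514abd8a dm-2 EQLOGIC,100E-00
size=3.0T features='1 queue_if_no_path' hwhandler='0' wp=rw
36861a6fddaa0d8306edd157b4d4aed23 dm-0 EQLOGIC,100E-00
size=2.0T features='1 queue_if_no_path' hwhandler='0' wp=rw
"""

if __name__ == "__main__":
    only_a, only_b = missing_luns(NODE01, NODE02)
    print("only on node01:", only_a)
    print("only on node02:", only_b)
```

The dm-N numbers differ between the nodes, which is expected; the WWIDs are what must match.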

In this architecture, the storage connection is established via bond1. Both servers are configured to use jumbo frames with an MTU of 9000.

[root@ovs-node02 network-scripts]# cat ifcfg-bond1

DEVICE=bond1

BONDING_OPTS="mode=6 miimon=250 use_carrier=1 updelay=500 downdelay=500 primary_reselect=2 primary=eth1"

BOOTPROTO=static

IPADDR=*.*.*.*

NETMASK=*.*.*.*

ONBOOT=yes

MTU=9000

-- ifcfg-eth1

[root@ovs-node02 network-scripts]# cat ifcfg-eth1

DEVICE="eth1"

BOOTPROTO="none"

DHCP_HOSTNAME="ovs-node02"

HWADDR="*.*.*.*"

NM_CONTROLLED="yes"

ONBOOT="yes"

TYPE="Ethernet"

UUID="36f65c92-3ad9-487b-b9ab-5bd792372d37"

MASTER=bond1

SLAVE=yes

MTU=9000

-- ifcfg-eth2

[root@ovs-node02 network-scripts]# cat ifcfg-eth2

DEVICE="eth2"

BOOTPROTO="none"

DHCP_HOSTNAME="ovs-node02"

HWADDR="*.*.*.*"

NM_CONTROLLED="yes"

ONBOOT="yes"

TYPE="Ethernet"

UUID="44589639-3b05-453b-9dc5-1b1a3b4d4c4d"

MASTER=bond1

SLAVE=yes

MTU=9000
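
Since the MTU is set in three separate files, it is easy for them to drift apart. The Python sketch below parses ifcfg-style KEY=VALUE text and reports slave interfaces whose MTU differs from the bond's. The function names are hypothetical, and treating a missing MTU line as 1500 is an assumption (the usual Ethernet default), not something taken from the configuration above.

```python
def parse_ifcfg(text):
    """Parse ifcfg-style KEY=VALUE lines into a dict, stripping double quotes."""
    settings = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        settings[key.strip()] = value.strip().strip('"')
    return settings


def mtu_mismatches(bond_cfg_text, slave_cfg_texts, default_mtu=1500):
    """Return device names of slaves whose MTU differs from their bond's MTU."""
    bond = parse_ifcfg(bond_cfg_text)
    bond_mtu = int(bond.get("MTU", default_mtu))  # assumed default when unset
    mismatched = []
    for text in slave_cfg_texts:
        slave = parse_ifcfg(text)
        if slave.get("MASTER") != bond.get("DEVICE"):
            continue  # not a slave of this bond
        if int(slave.get("MTU", default_mtu)) != bond_mtu:
            mismatched.append(slave.get("DEVICE", "?"))
    return mismatched


# Trimmed copies of the files shown above.
BOND1 = """\
DEVICE=bond1
BONDING_OPTS="mode=6 miimon=250 use_carrier=1 updelay=500 downdelay=500 primary_reselect=2 primary=eth1"
BOOTPROTO=static
ONBOOT=yes
MTU=9000
"""

ETH1 = """\
DEVICE="eth1"
BOOTPROTO="none"
ONBOOT="yes"
MASTER=bond1
SLAVE=yes
MTU=9000
"""

ETH2 = """\
DEVICE="eth2"
BOOTPROTO="none"
ONBOOT="yes"
MASTER=bond1
SLAVE=yes
MTU=9000
"""

if __name__ == "__main__":
    print("mismatched slaves:", mtu_mismatches(BOND1, [ETH1, ETH2]))
```

This only checks the files on one host. It says nothing about whether the switch ports between the nodes accept frames of that size.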

The logs above point to a network connectivity problem, so we decided to validate bond1 by pinging with 8000-byte and 1000-byte packets, with fragmentation prohibited (-M do).

As shown below, the 8000-byte ping gets no reply, while the 1000-byte ping succeeds. This indicates a configuration mismatch on the switch side.

Test with 8000-byte packets

[root@ovs-node02 ~]# ping -s 8000 -M do 10.110.210.201

PING 10.110.210.201 (10.110.210.201) 8000(8028) bytes of data.

Test with 1000-byte packets

[root@ovs-node02 ~]# ping -s 1000 -M do 10.110.210.201

PING 10.110.210.201 (10.110.210.201) 1000(1028) bytes of data.

1008 bytes from 10.110.210.201: icmp_seq=1 ttl=64 time=0.183 ms

1008 bytes from 10.110.210.201: icmp_seq=2 ttl=64 time=0.194 ms
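
The ping header lines above show 8000(8028) and 1000(1028): the payload set with -s plus 28 bytes of headers. The Python sketch below works through that arithmetic, assuming IPv4 without options (20-byte IP header plus 8-byte ICMP header). The function names are only illustrative.

```python
IPV4_HEADER_BYTES = 20  # assumption: IPv4 header without options
ICMP_HEADER_BYTES = 8   # ICMP echo header
OVERHEAD = IPV4_HEADER_BYTES + ICMP_HEADER_BYTES


def max_ping_payload(mtu):
    """Largest `ping -s` value that fits in one unfragmented packet for this MTU."""
    return mtu - OVERHEAD


def fits_without_fragmentation(payload, path_mtu):
    """True if a ping with this payload fits the given path MTU when -M do is set."""
    return payload + OVERHEAD <= path_mtu


if __name__ == "__main__":
    for mtu in (1500, 9000):
        print(f"MTU {mtu}: max ping payload {max_ping_payload(mtu)}")
    for payload in (1000, 8000):
        for mtu in (1500, 9000):
            ok = fits_without_fragmentation(payload, mtu)
            print(f"payload {payload} over MTU {mtu}: {'fits' if ok else 'too large'}")
```

An 8000-byte payload fits an MTU of 9000 but not 1500, while a 1000-byte payload fits both. This matches a path where some device between the nodes does not pass jumbo frames.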

When we try to add the node to the cluster, it sends 9000-byte frames over the storage network to node01. In this scenario, jumbo frames are not working end to end, so the automatic storage mounting fails.

The solution is to change the network MTU size on node02 to 1500 and restart the network service. To be on the safe side, you can reboot node02.

[root@ovs-node02 network-scripts]# cat ifcfg-bond1

DEVICE=bond1

BONDING_OPTS="mode=6 miimon=250 use_carrier=1 updelay=500 downdelay=500 primary_reselect=2 primary=eth1"

BOOTPROTO=static

IPADDR=*.*.*.*

NETMASK=*.*.*.*

ONBOOT=yes

MTU=1500

[root@ovs-node02 network-scripts]#

Now try to add node02 to the cluster again. This solution worked in our environment. Once the node has joined, try to map the other repositories to node02.

Issues like this are complex and take time to understand. I would recommend creating a service request with Oracle before making any changes.

Look carefully at the ovs-agent log and /var/log/messages to understand the issue. I would also suggest generating a sosreport on the problematic node.
