Zero Downtime Migration (ZDM) is the ultimate solution to migrate your Oracle database to Oracle Cloud. I recently started using it quite a lot for on-prem to Exadata Cloud@Customer migrations. In my last blog post, I shared tips about a ZDM installation error related to MySQL. This time, I’ll describe why my environment crashed the ZDM service every time an eval command was run, and provide the fix. This is not a bug, just unexpected behavior caused by a not-so-clean VM host.

Acknowledgment

I’d like to thank the ZDM Dev Team, who chimed in to tackle this tough one after I opened an SR. The issue had never been seen before, which is why I decided to write about it.

ZDM: 21.3 Build

OS: Oracle Linux 8.4 kernel 5.4.17-2102.201.3.el8uek.x86_64

After the installation, I made sure that connectivity was in place between the ZDM host and the source/target systems (SSH/SQL*Net).

Prepare a response file for a physical online migration with the parameters required to reproduce the behavior.

The parameters themselves are not important in our case.

Responsefile

$ cat physical_online_demo.rsp | grep -v ^#

TGT_DB_UNIQUE_NAME=TGTCDB

MIGRATION_METHOD=ONLINE_PHYSICAL

DATA_TRANSFER_MEDIUM=DIRECT

PLATFORM_TYPE=EXACC

..More
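When a response file grows long, it can help to check the value of a single parameter rather than eyeballing the whole file. The helper below is a minimal sketch: `rsp_get` is a hypothetical name, not part of the ZDM tooling, and it assumes the simple `KEY=value` layout shown above.

```shell
# rsp_get FILE KEY
# Print the value assigned to KEY in a response file, ignoring comment lines.
# Hypothetical helper for illustration; not shipped with ZDM.
rsp_get() {
  grep -v '^#' "$1" | awk -F= -v key="$2" '
    $1 == key { sub(/^[^=]*=/, ""); value = $0; found = 1 }  # keep the last match
    END { if (found) print value; else exit 1 }               # non-zero if KEY is absent
  '
}
```

For the file above, `rsp_get physical_online_demo.rsp MIGRATION_METHOD` would print `ONLINE_PHYSICAL`.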

ZDM Service Started:

$ zdmservice status

---------------------------------------

Service Status

---------------------------------------

Running: true

Tranferport:

Conn String: jdbc:mysql://localhost:8897/

RMI port: 8895

HTTP port: 8896

Wallet path: /u01/app/oracle/zdmbase/crsdata/velzdm2prm/security

Run ZDMCLI Listphases

$ZDM_HOME/bin/zdmcli migrate database -sourcedb SRCDB \
  -sourcenode srcHost -srcauth zdmauth \
  -srcarg1 user:zdmuser \
  -targetnode tgtNode \
  -tgtauth zdmauth \
  -tgtarg1 user:opc \
  -rsp ./physical_online_demo.rsp -listphases

zdmhostname: 2022-08-30T19:15:00.499Z : Processing response file ...

pause and resume capable phases for this operation: "

ZDM_GET_SRC_INFO

ZDM_GET_TGT_INFO

ZDM_PRECHECKS_SRC

ZDM_PRECHECKS_TGT

ZDM_SETUP_SRC

ZDM_SETUP_TGT

ZDM_PREUSERACTIONS

ZDM_PREUSERACTIONS_TGT

ZDM_VALIDATE_SRC

ZDM_VALIDATE_TGT

ZDM_DISCOVER_SRC

ZDM_COPYFILES

ZDM_PREPARE_TGT

ZDM_SETUP_TDE_TGT

ZDM_RESTORE_TGT

ZDM_RECOVER_TGT

ZDM_FINALIZE_TGT

ZDM_CONFIGURE_DG_SRC

ZDM_SWITCHOVER_SRC

ZDM_SWITCHOVER_TGT

ZDM_POST_DATABASE_OPEN_TGT

ZDM_DATAPATCH_TGT

ZDM_NONCDBTOPDB_PRECHECK

ZDM_NONCDBTOPDB_CONVERSION

ZDM_POST_MIGRATE_TGT

ZDM_POSTUSERACTIONS

ZDM_POSTUSERACTIONS_TGT

ZDM_CLEANUP_SRC

ZDM_CLEANUP_TGT"

Run ZDMCLI Eval Command

$ZDM_HOME/bin/zdmcli migrate database -sourcedb SRCDB \
  -sourcenode srcHost -srcauth zdmauth \
  -srcarg1 user:zdmuser \
  -targetnode tgtNode \
  -tgtauth zdmauth \
  -tgtarg1 user:opc \
  -rsp ./physical_online_demo.rsp -eval

Enter source database SRCDB SYS password:

zdmhostname: 2022-08-30T20:15:00.499Z : Processing response file ...

Operation "zdmcli migrate database" scheduled with the job ID "1".

Error:

The eval command ends up crashing the service as soon as the execution kicks in.

Querying Job Status

$ zdmcli query job -jobid 1

PRGT-1038: ZDM service is not running.

Failed to retrieve RMIServer stub: javax.naming.ServiceUnavailableException

[Root exception is java.rmi.ConnectException: Connection refused to host:zdmhost;

nested exception is: java.net.ConnectException: Connection refused (Connection refused)]

ZDMService Status: down

$ zdmservice status | grep Running

Running: false
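Because the service can go down silently after a job is submitted, it may be useful to turn the status check into something a script can act on. The following is a minimal sketch, assuming the `Running: true` / `Running: false` line format shown in the status output above; `zdm_is_running` is a hypothetical helper name.

```shell
# zdm_is_running
# Read `zdmservice status` output on stdin and succeed only if it
# contains a "Running: true" line. Hypothetical helper, not part of ZDM.
zdm_is_running() {
  grep -Eq '^[[:space:]]*Running:[[:space:]]*true[[:space:]]*$'
}

# Example use (assumes zdmservice is on PATH, as in the commands above):
#   zdmservice status | zdm_is_running || echo "ZDM service is down"
```

Reading from stdin keeps the check independent of where `zdmservice` is installed.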

Trace the ZDM Service

I tried many things to find out where this behavior came from, including tracing the ZDM service:

export SRVM_TRACE=TRUE

export GHCTL_TRACEFILE=$ZDM_BASE/srvm.trc

$ZDM_HOME/bin/zdmservice stop

$ZDM_HOME/bin/zdmservice start

--> Re-Run the Eval Command

No luck: every time the service restarted, it crashed again before I had time to run another eval.

Upgrade/Reinstall ZDM

I also tried an upgrade to the latest build and then a full reinstall, but ZDM still crashed.

$ ./zdminstall.sh update oraclehome=$ZDM_HOME ziploc=./NewBuild/zdm_home.zip

Because the previous job was still in the queue when zdmservice restarted, I didn’t need to run anything to crash ZDM.

Logs to check in ZDM

Any time you open an SR for a ZDM issue, the usual place to fetch logs from is ZDM_BASE. The command below, run from that directory, collects them:

$ find . -iregex '.*\.\(log.*\|err\|out\|trc\)$' -exec tar -rvf out.tar {} \;
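The same collection step can be wrapped in a function so it does not depend on the current directory and does not append duplicates when run twice. This is a sketch under assumptions: `collect_zdm_logs` is a hypothetical name, and it relies on GNU `find` and GNU `tar`, which Oracle Linux provides.

```shell
# collect_zdm_logs BASE_DIR OUT_TAR
# Bundle every log, err, out and trc file under BASE_DIR into OUT_TAR.
# OUT_TAR should be an absolute path, since the function changes directory.
# Hypothetical helper; uses the same file pattern as the find command above.
collect_zdm_logs() {
  (
    cd "$1" || exit 1
    # NUL-separated names keep paths with spaces intact.
    find . -type f -iregex '.*\.\(log.*\|err\|out\|trc\)$' -print0 |
      tar --null -cf "$2" -T -
  )
}
```

Unlike `tar -r`, which appends to an existing archive, `tar -c` rewrites the archive on each run, so the bundle always reflects the current state of the logs.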

This was hard to uncover, considering the issue had never been encountered before, but the ZDM server log offers a clue:

$ view $ZDM_BASE/crsdata/`hostname`/rhp/zdmserver.log.0

… [DEBUG] [HASContext.<init>:129] moduleInit = 7

[DEBUG] [SRVMContext.init:224] Performing SRVM Context init. Init Counter=1

[DEBUG] [Version.isPre:804] version to be checked 21.0.0.0.0 major version to check against 10

[DEBUG] [Version.isPre:815] isPre.java: Returning FALSE

[DEBUG] [OCR.loadLibrary:339] 17999 Inside constructor of OCR

[DEBUG] [SRVMContext.init:224] Performing SRVM Context init. Init Counter=2

[DEBUG] [OCR.isCluster:1061] Calling OCRNative for isCluster()

[CRITICAL] [OCRNative.Native] JNI: clsugetconf retValue = 5

[CRITICAL] [OCRNative.Native] JNI: clsugetconf failed with error code = 5

[DEBUG] [OCR.isCluster:1065] OCR Result status = false

[DEBUG] [Cluster.isCluster:xx] Failed to detect cluster: JNI: clsugetconf failed
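In a log dominated by DEBUG entries, the two CRITICAL lines above are easy to miss. A small filter can surface them; this is only a sketch, and `critical_lines` is a hypothetical helper name that assumes the `[CRITICAL]` tag format seen in this excerpt.

```shell
# critical_lines FILE
# Print every [CRITICAL] entry in FILE, prefixed with its line number.
# -F treats the pattern as a fixed string, so the brackets are literal.
critical_lines() {
  grep -nF '[CRITICAL]' "$1"
}

# Example use against the server log viewed above:
#   critical_lines "$ZDM_BASE/crsdata/$(hostname)/rhp/zdmserver.log.0"
```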

We can see that some OCR checks were failing and that a mismatch seems to have caused the failure, but why?

ZDM software (without delving into details) has bits of grid infrastructure core embedded within.

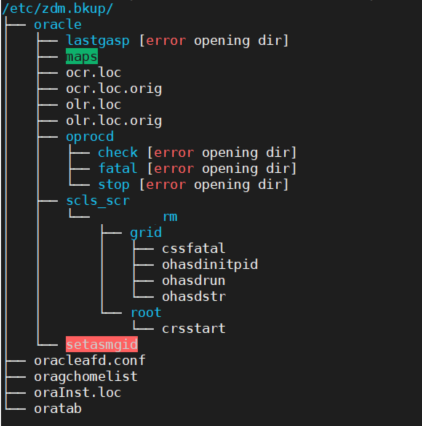

The ZDM VM had an oratab and a bunch of other OCR files under /etc/oracle that were used to perform the CRS version check (ocr.loc). This, in turn, broke the ZDM service because CRS couldn’t be detected.

The VM (Virtual Machine) had leftovers from an old DB (Database) and grid environment that weren’t cleaned.

$ cat /etc/oracle/oratab

+ASM:/u01/app/19.0.0/grid:N

CDB1:/u01/app/oracle/product/19.0.0/dbhome_1:W

We first moved the files out of /etc/oracle and the eval command worked without crashing the ZDM service.
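The exact commands used for the cleanup are not shown here, so the following is only a sketch of one way to do it. It moves the contents of a directory aside instead of deleting them, which keeps a rollback possible. The function name `stash_dir_contents` and the backup path in the usage comment are hypothetical, and `-mindepth`/`-maxdepth` assume GNU or BSD `find`.

```shell
# stash_dir_contents SRC_DIR DEST_DIR
# Move every entry (including dotfiles) directly under SRC_DIR into DEST_DIR,
# creating DEST_DIR if needed. SRC_DIR itself is left in place, empty.
stash_dir_contents() {
  mkdir -p "$2" || return 1
  find "$1" -mindepth 1 -maxdepth 1 -exec mv -- {} "$2"/ \;
}

# Example use (hypothetical backup location; /etc/oracle normally needs root):
#   stash_dir_contents /etc/oracle /root/etc_oracle.bak
```

After moving the files, restart the ZDM service and re-run the eval command to confirm the crash is gone.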
