Virtualization brought a significant change to the IT (Information Technology) industry and has helped many organizations use server resources efficiently. Even though cloud adoption is growing, some companies are not ready to move their workloads to the cloud because of data sensitivity and business obstacles. For these companies, virtualization remains the practical way to reduce IT infrastructure cost.

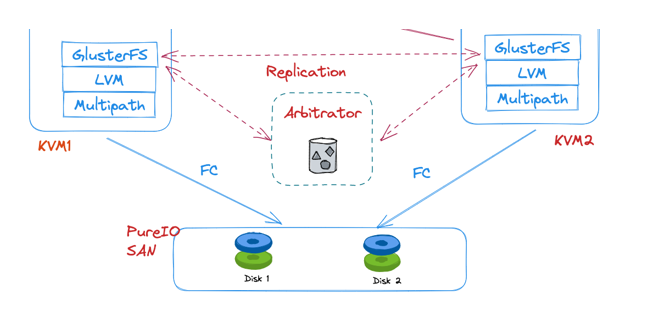

For small and medium-scale companies, IT budgets are really tight. With a limited budget, it is challenging to achieve storage stability with virtualization. An optimal virtualization architecture needs three nodes, because a three-node architecture gives proper fencing and high availability. Oracle Corporation built on open-source virtualization to introduce Oracle Linux Virtualization Manager (OLVM). OLVM does not prevent you from running a two-node architecture. When it comes to storage availability, there are three ways to achieve it using OLVM.

For GlusterFS and iSCSI, a 10G back-end network for management is a must, because GlusterFS storage replication happens over the management network.

In a two-node architecture, storage stability can be achieved by implementing GlusterFS arbitrated replicated volumes. This mainly addresses storage split-brain conditions.

In this article, I would like to highlight the implementation steps of the Gluster Storage Arbitrator.

GlusterFS Arbitrator Implementation prerequisites.

Split-brains in replica volumes

When a file is in split-brain, there is an inconsistency in either the data or the metadata (permissions, uid/gid, extended attributes, etc.) of the file amongst the bricks of a replica. We do not have enough information to authoritatively pick one copy as pristine and heal the bad copies, despite all bricks being up and online. For directories, there is also an entry split-brain, where a file inside the directory has different gfids or file types (say one is a file and another is a directory of the same name) across the bricks of a replica.

The arbiter volume is a special subset of replica volumes that is aimed at preventing split brains and providing the same consistency guarantees as a normal replica 3 volume without consuming 3x space.
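To make the idea concrete, here is a deliberately simplified sketch of the majority rule behind quorum. The function name `has_quorum` is my own and not part of Gluster, and real client quorum has additional rules, so treat a majority as a necessary condition only.

```shell
# Simplified model of quorum for one replica set (hypothetical helper):
# succeed when more than half of the bricks are reachable.
# $1 = bricks currently up, $2 = total bricks in the replica set
has_quorum() {
    [ $(( 2 * $1 )) -gt "$2" ]
}

# Two data bricks plus one arbiter: losing any single brick keeps a majority.
has_quorum 2 3 && echo "2 of 3 up: majority"

# Plain replica 2: a single surviving brick is not a majority.
has_quorum 1 2 || echo "1 of 2 up: no majority"
```

This is why a third, metadata-only brick helps a two-node setup: with three voters, one node can fail and the remaining two can still agree on which copy is good.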

For the complete picture, see the Gluster documentation: https://docs.gluster.org/en/v3/Administrator%20Guide/arbiter-volumes-and-quorum/

Red Hat: https://access.redhat.com/documentation/en-us/red_hat_gluster_storage/3.3/html/administration_guide/creating_arbitrated_replicated_volumes

The arbitrator stores only the metadata of the files held on the data bricks. For a 1 TB replicated data brick with an average file size of 2 GB, the arbitrator needs only about 2 MB of space to store metadata:

minimum arbiter brick size = 4 KB * ( size in KB of largest data brick in volume or replica set / average file size in KB )

minimum arbiter brick size = 4 KB * ( 1 TB / 2 GB )

= 4 KB * ( 1000000000 KB / 2000000 KB )

= 4 KB * 500

= 2000 KB

= 2 MB
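The same arithmetic can be scripted so you can plug in your own numbers. This is a minimal sketch; the function name `min_arbiter_kb` is my own, and the average file size is an estimate you have to supply for your workload.

```shell
# Estimate the minimum arbiter brick size in KB (hypothetical helper).
# $1 = size of the largest data brick in KB
# $2 = average file size in KB (your own estimate)
min_arbiter_kb() {
    # 4 KB of metadata per file, times the number of files the brick can hold.
    echo $(( 4 * $1 / $2 ))
}

# Example from the text: 1 TB data brick, 2 GB average file size.
min_arbiter_kb 1000000000 2000000   # prints 2000 (KB), about 2 MB
```

Smaller average file sizes raise the requirement quickly, so it is worth running the estimate with a pessimistic file size before sizing the disk.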

For this example, we are going to host the arbitrator disk on the OLVM engine server.

Partition the disk using fdisk and create an LVM logical volume on it. Our other GlusterFS bricks are hosted on LVM, and I would recommend keeping the data brick and arbitrator disk layouts identical.

[root@olvm-engine-01 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 50G 0 disk

├─sda1 8:1 0 1G 0 part /boot

└─sda2 8:2 0 49G 0 part

├─ol-root 252:0 0 44G 0 lvm /

└─ol-swap 252:1 0 5G 0 lvm [SWAP]

sdb 8:16 0 250G 0 disk

sdc 8:32 0 100G 0 disk

sdd 8:48 0 100G 0 disk

sr0 11:0 1 1024M 0 rom

[root@olvm-engine-01 ~]# fdisk /dev/sdc

Execute lsblk to check the disk layout after partitioning:

[root@olvm-engine-01 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 50G 0 disk

├─sda1 8:1 0 1G 0 part /boot

└─sda2 8:2 0 49G 0 part

├─ol-root 252:0 0 44G 0 lvm /

└─ol-swap 252:1 0 5G 0 lvm [SWAP]

sdb 8:16 0 250G 0 disk

sdc 8:32 0 100G 0 disk

└─sdc1 8:33 0 100G 0 part

sdd 8:48 0 100G 0 disk

sr0 11:0 1 1024M 0 rom

Set up LVM for the arbitrator disk:

[root@olvm-engine-01 ~]# pvcreate /dev/sdc1

Physical volume "/dev/sdc1" successfully created.

[root@olvm-engine-01 ~]# pvs

PV VG Fmt Attr PSize PFree

/dev/sda2 ol lvm2 a-- <49.00g 0

[root@olvm-engine-01 ~]# vgcreate GFS_DEV_VG /dev/sdc1

Volume group "GFS_DEV_VG" successfully created

[root@olvm-engine-01 ~]# lvcreate -L 90G -n GFS_DEV_LV GFS_DEV_VG

Logical volume "GFS_DEV_LV" created.

Create an XFS file system on the arbitrator disk and mount it as a persistent mount point.

[root@olvm-engine-01 ~]# mkfs.xfs -f -i size=512 -L glusterfs /dev/mapper/GFS_DEV_VG-GFS_DEV_LV

meta-data=/dev/mapper/GFS_DEV_VG-GFS_DEV_LV isize=512 agcount=4, agsize=5898240 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=1, sparse=1, rmapbt=0

= reflink=1

data = bsize=4096 blocks=23592960, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0, ftype=1

log =internal log bsize=4096 blocks=11520, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

Discarding blocks...Done.

[root@olvm-engine-01 ~]#
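The persistent mount itself is not captured in the output above, so here is a minimal sketch of one way to do it. The helper `fstab_entry` and the `defaults 0 0` mount options are my assumptions; the device and mount point are the ones that appear in the mkfs.xfs and lsblk output in this article.

```shell
# Hypothetical helper: print an /etc/fstab line for an XFS brick.
# $1 = block device, $2 = mount point
fstab_entry() {
    printf '%s %s xfs defaults 0 0\n' "$1" "$2"
}

DEV=/dev/mapper/GFS_DEV_VG-GFS_DEV_LV
MNT=/nodirectwritedata/glusterfs/dev_arb_brick_03

# Preview the line before touching the system.
fstab_entry "$DEV" "$MNT"

# To apply it, run as root on the engine server (left commented out
# because these commands change the system):
#   mkdir -p "$MNT"
#   fstab_entry "$DEV" "$MNT" >> /etc/fstab
#   mount -a
#   df -h "$MNT"
```

Previewing the line first makes it easy to catch a wrong device path before it lands in /etc/fstab.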

Execute lsblk to validate the partitioned disk and the mount point:

[root@olvm-engine-01 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 50G 0 disk

├─sda1 8:1 0 1G 0 part /boot

└─sda2 8:2 0 49G 0 part

├─ol-root 252:0 0 44G 0 lvm /

└─ol-swap 252:1 0 5G 0 lvm [SWAP]

sdb 8:16 0 250G 0 disk

sdc 8:32 0 100G 0 disk

└─sdc1 8:33 0 100G 0 part

└─GFS_DEV_VG-GFS_DEV_LV 252:2 0 90G 0 lvm /nodirectwritedata/glusterfs/dev_arb_brick_03

sdd 8:48 0 100G 0 disk

└─sdd1 8:49 0 100G 0 part

sr0 11:0 1 1024M 0 rom

[root@olvm-engine-01 ~]#

Now discover the arbitrator host from the KVM hosts.

gluster peer probe olvm-engine-01.oracle.ca -- Execute on both KVMs

Expected output after peering

[root@KVM01 ~]# gluster peer probe olvm-engine-01.oracle.ca

peer probe: success

[root@KVM02 ~]# gluster peer probe olvm-engine-01.oracle.ca

peer probe: Host olvm-engine-01.oracle.ca port 24007 already in peer list
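Before adding the brick, it is worth confirming that every node in the trusted pool is connected. The sketch below parses the output of `gluster pool list`; the function name `all_peers_connected` is my own, and it assumes the usual three-column layout (UUID, Hostname, State) with a header line.

```shell
# Hypothetical helper: read `gluster pool list` output on stdin and
# succeed only if every listed node is in the Connected state.
all_peers_connected() {
    awk 'BEGIN { bad = 0 }
         NR > 1 && NF > 0 && $NF != "Connected" { bad = 1 }
         END { exit bad }'
}

# Usage on a KVM host (requires a running glusterd):
#   gluster pool list | all_peers_connected && echo "all peers connected"
```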

Add the arbitrator brick to the existing volume, converting it to replica 3 with one arbiter:

[root@KVM01 ~]# gluster volume add-brick gvol0 replica 3 arbiter 1 olvm-engine-01.oracle.ca:/nodirectwritedata/glusterfs/arb_brick3/gvol0

volume add-brick: success

Verify that the volume now lists the third brick as the arbiter:

[root@KVM120 ~]# gluster volume info dev_gvol0

Volume Name: dev_gvol0

Type: Replicate

Volume ID: db1a8a7e-6709-4a1c-8839-ff0ab3cc4ebe

Status: Started

Snapshot Count: 0

Number of Bricks: 1 x (2 + 1) = 3

Transport-type: tcp

Bricks:

Brick1: KVM120:/nodirectwritedata/glusterfs/dev_brick_01/dev_gvol0

Brick2: KVM121:/nodirectwritedata/glusterfs/dev_brick_02/dev_gvol0

Brick3: sofe-olvm-01.sofe.ca:/nodirectwritedata/glusterfs/dev_arb_brick_03/dev_gvol0 (arbiter)

Options Reconfigured:

storage.fips-mode-rchecksum: on

transport.address-family: inet

nfs.disable: on

performance.client-io-threads: off

cluster.data-self-heal: on

cluster.metadata-self-heal: on

cluster.entry-self-heal: on

cluster.favorite-child-policy: mtime
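Once the arbiter brick is in place, you can periodically check whether any files are in split-brain. The sketch below sums the per-brick counts reported by `gluster volume heal <volume> info split-brain`; the function name `count_split_brain` is my own, and it assumes each brick section contains a line starting with "Number of entries in split-brain:".

```shell
# Hypothetical helper: read `gluster volume heal <vol> info split-brain`
# output on stdin and print the total number of split-brain entries.
count_split_brain() {
    awk -F': ' '/^Number of entries in split-brain:/ { total += $2 }
                END { print total + 0 }'
}

# Usage on a KVM host, with the volume name shown above:
#   gluster volume heal dev_gvol0 info split-brain | count_split_brain
```

A result of 0 means no brick reports split-brain entries; anything higher deserves a closer look at the full heal output.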

An OLVM two-node architecture with GlusterFS data domains has a high chance of running into split-brain issues. These issues can be avoided by implementing arbitrated replicated volumes.

The important point is that you do not need a huge disk to implement the arbitrator. But remember that the OLVM management network should be 10G to support brick replication, because every change at the storage level is replicated over the management network.
