

We are in the cloud era, and many organizations are adopting cloud technologies to gain a competitive advantage. The cloud market is vast and offers a diverse selection of services, so selecting a suitable cloud platform for an organization is not easy.

Oracle Cloud Platform is a leading platform for hosting Oracle Database workloads. It offers many database service types, such as Exadata Cloud Service, Exadata Cloud@Customer, and Autonomous Database, and the list continues.

The Oracle Database service is an efficient and effective way to host databases. Oracle has automated the service to make deployment easy.

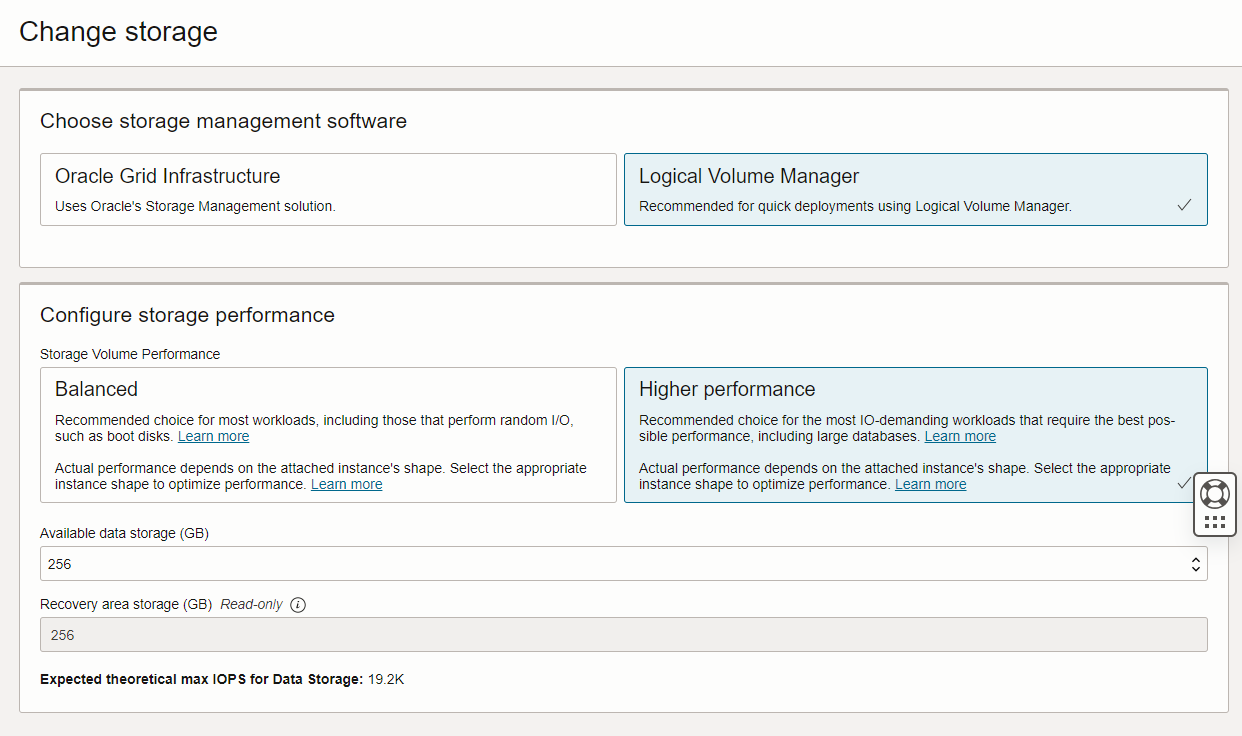

Database Service offers two types of storage management software: Oracle Automatic Storage Management (ASM) and Logical Volume Manager (LVM).

In this article, I describe an issue we encountered with a database service VM after creating it with the LVM-based storage architecture.

When the VM was created and started for the first time, the mount points were present as expected. After we rebooted the database server, the mount points disappeared.

Below is the output of df -h after the server reboot. The Oracle binary and data mount points (/u01, /u02, and /u03) are missing:

```
[root@local-db-01 ~]# df -h
Filesystem                     Size  Used Avail Use% Mounted on
devtmpfs                       7.7G     0  7.7G   0% /dev
tmpfs                          7.7G     0  7.7G   0% /dev/shm
tmpfs                          7.7G   17M  7.7G   1% /run
tmpfs                          7.7G     0  7.7G   0% /sys/fs/cgroup
/dev/mapper/vg00-root          9.6G  2.8G  6.3G  31% /
/dev/sda2                      488M  143M  311M  32% /boot
/dev/mapper/vg00-home          960M  3.4M  890M   1% /home
/dev/sda1                      128M  7.5M  121M   6% /boot/efi
/dev/mapper/vg00-opt            34G  3.2G   30G  10% /opt
/dev/mapper/vg00-var           9.6G  1.6G  7.6G  17% /var
/dev/mapper/vg00-var_log       3.8G   29M  3.6G   1% /var/log
/dev/mapper/vg00-var_log_audit 1.9G   13M  1.8G   1% /var/log/audit
tmpfs                          7.7G  124K  7.7G   1% /tmp
tmpfs                          7.7G     0  7.7G   0% /var/tmp
```
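To make this comparison repeatable, you can check the expected mount points against /proc/mounts instead of reading df output by eye. The following is a minimal sketch. It assumes the Oracle mount points are /u01, /u02, and /u03, as seen in the df output once the issue is fixed. check_mounts is a hypothetical helper name, not part of any Oracle tooling.

```shell
# Minimal sketch: report which expected mount points are absent.
# check_mounts is a hypothetical helper, not part of any Oracle tooling.
# Usage: check_mounts MOUNTS_FILE MOUNTPOINT...
#   MOUNTS_FILE is normally /proc/mounts; it is a parameter so the
#   function can also be run against a saved copy of that file.
check_mounts() {
    mounts_file=$1
    shift
    rc=0
    for mp in "$@"; do
        # Field 2 of each /proc/mounts line is the mount point.
        if awk -v mp="$mp" '$2 == mp { found = 1 } END { exit !found }' "$mounts_file"; then
            echo "mounted: $mp"
        else
            echo "MISSING: $mp"
            rc=1
        fi
    done
    return "$rc"
}

# Example for this database server (assumed mount points):
# check_mounts /proc/mounts /u01 /u02 /u03
```

Run right after the reboot described above, check_mounts /proc/mounts /u01 /u02 /u03 would print a MISSING line for each of the three mount points and exit non-zero. That makes it usable in a post-boot health check.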

To get a clear picture of the disk layout, run lsblk. The disks themselves are still present on the server:

```
[root@local-db-01 ~]# lsblk
NAME                   MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
sdf                       8:80  0  128G  0 disk
sdd                       8:48  0  200G  0 disk
sdb                       8:16  0  128G  0 disk
sdg                       8:96  0  128G  0 disk
sde                       8:64  0  128G  0 disk
sdc                       8:32  0  128G  0 disk
sda                        8:0  0   75G  0 disk
├─sda4                     8:4  0 25.4G  0 part
│ ├─vg00-opt             252:4  0   35G  0 lvm  /opt
│ └─vg00-var             252:3  0   10G  0 lvm  /var
├─sda2                     8:2  0  512M  0 part /boot
├─sda3                     8:3  0   49G  0 part
│ ├─vg00-var_log         252:1  0    4G  0 lvm  /var/log
│ ├─vg00-root            252:6  0   10G  0 lvm  /
│ ├─vg00-opt             252:4  0   35G  0 lvm  /opt
│ ├─vg00-var_tmp         252:2  0    1G  0 lvm
│ ├─vg00-var_log_audit   252:0  0    2G  0 lvm  /var/log/audit
│ ├─vg00-swap            252:7  0   10G  0 lvm  [SWAP]
│ ├─vg00-home            252:5  0    1G  0 lvm  /home
│ └─vg00-var             252:3  0   10G  0 lvm  /var
└─sda1                     8:1  0  128M  0 part /boot/efi
sdh                      8:112  0  128G  0 disk
```

Next, list /dev/mapper to see which logical volumes have device nodes. Only the vg00 volumes are present; the Oracle LVs are missing:

```
[root@local-db-01 ~]# ls -l /dev/mapper/
total 0
crw------- 1 root root 10, 236 Jul 24 09:19 control
lrwxrwxrwx 1 root root 7 Jul 24 09:19 vg00-home -> ../dm-5
lrwxrwxrwx 1 root root 7 Jul 24 09:19 vg00-opt -> ../dm-4
lrwxrwxrwx 1 root root 7 Jul 24 09:19 vg00-root -> ../dm-6
lrwxrwxrwx 1 root root 7 Jul 24 09:19 vg00-swap -> ../dm-7
lrwxrwxrwx 1 root root 7 Jul 24 09:19 vg00-var -> ../dm-3
lrwxrwxrwx 1 root root 7 Jul 24 09:19 vg00-var_log -> ../dm-1
lrwxrwxrwx 1 root root 7 Jul 24 09:19 vg00-var_log_audit -> ../dm-0
lrwxrwxrwx 1 root root 7 Jul 24 09:19 vg00-var_tmp -> ../dm-2
```
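An active LV gets a node under /dev/mapper, so this listing can be checked volume by volume. The sketch below assumes the Oracle volumes are BITS_GRP/BITS, DATA_GRP/DATA, and RECO_GRP/RECO, which are the names lvs reports on this server. mapper_name and missing_mapper_nodes are hypothetical helper names.

```shell
# Minimal sketch: check whether LVs have device-mapper nodes under /dev/mapper.
# mapper_name and missing_mapper_nodes are hypothetical helper names.

# device-mapper joins the VG and LV names with '-', so a '-' inside either
# name is escaped by doubling it (e.g. VG "my-vg", LV "data" -> "my--vg-data").
mapper_name() {
    vg=$(printf '%s' "$1" | sed 's/-/--/g')
    lv=$(printf '%s' "$2" | sed 's/-/--/g')
    printf '%s-%s\n' "$vg" "$lv"
}

# Print each "VG/LV" argument whose /dev/mapper node does not exist,
# which is how an LV that was never activated shows up.
missing_mapper_nodes() {
    for pair in "$@"; do
        node=/dev/mapper/$(mapper_name "${pair%%/*}" "${pair#*/}")
        [ -e "$node" ] || echo "missing: $node"
    done
}

# Example for this server (assumed VG/LV names):
# missing_mapper_nodes BITS_GRP/BITS DATA_GRP/DATA RECO_GRP/RECO
```

In the state shown in the listing above, this would print one missing line per Oracle volume. None of the three Oracle LVs has a node.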

Boot-time LVM events are recorded in /var/log/messages, and reviewing this file helps explain the issue:

```
2023-07-24T09:12:26.985875-04:00 local-db-01 systemd: lvm2-lvmetad.socket failed to listen on sockets: No data available
2023-07-24T09:12:26.985989-04:00 local-db-01 systemd: Failed to listen on LVM2 metadata daemon socket.
2023-07-24T09:12:26.986117-04:00 local-db-01 systemd: Dependency failed for LVM2 PV scan on device 8:3.
2023-07-24T09:12:26.986233-04:00 local-db-01 systemd: Job lvm2-pvscan@8:3.service/start failed with result 'dependency'.
2023-07-24T09:12:26.986351-04:00 local-db-01 systemd: Dependency failed for LVM2 PV scan on device 8:4.
2023-07-24T09:12:26.986485-04:00 local-db-01 systemd: Job lvm2-pvscan@8:4.service/start failed with result 'dependency'.
2023-07-24T09:12:26.986604-04:00 local-db-01 systemd: Started D-Bus System Message Bus.
2023-07-24T09:12:27.019241-04:00 local-db-01 dbus-daemon: Failed to start message bus: Failed to open "/etc/selinux/minimum/contexts/dbus_contexts": No such file or directory
```

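The log shows the LVM2 metadata daemon socket (lvm2-lvmetad.socket) failing to listen. As a result, the LVM2 PV scan jobs that depend on it fail as well. The last line points to SELinux. dbus-daemon reports: Failed to open "/etc/selinux/minimum/contexts/dbus_contexts": No such file or directory. /etc/selinux/minimum is the policy directory for the minimum SELinux policy type.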
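When several servers may be affected, you can pull these messages out of the log with grep rather than paging through it. This is a minimal sketch. scan_boot_errors is a hypothetical helper, and its patterns only match the messages shown above, so other failure modes would need other patterns.

```shell
# Minimal sketch: extract the boot-time LVM and SELinux errors seen on this
# server from a syslog file (default /var/log/messages).
# scan_boot_errors is a hypothetical helper; the patterns are assumptions
# based only on the messages in this incident.
scan_boot_errors() {
    log=${1:-/var/log/messages}
    grep -E 'lvmetad|LVM2 metadata daemon|LVM2 PV scan|lvm2-pvscan|dbus_contexts' "$log"
}

# Example:
# scan_boot_errors /var/log/messages
```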

To confirm, check the disk layout at the LVM level with the pvs, vgs, and lvs commands:

```
[root@local-db-01 log]# pvs
WARNING: Failed to connect to lvmetad. Falling back to device scanning.
selabel_open failed: No such file or directory
selabel_open failed: No such file or directory
selabel_open failed: No such file or directory
selabel_open failed: No such file or directory
selabel_open failed: No such file or directory
selabel_open failed: No such file or directory
selabel_open failed: No such file or directory
selabel_open failed: No such file or directory
selabel_open failed: No such file or directory
PV VG Fmt Attr PSize PFree
/dev/sda3 vg00 lvm2 a-- <49 .00g="" 0="" 1.37g="" 25.37g="" a--="" bits_grp="" code="" data_grp="" dev="" g="" lvm2="" reco_grp="" sda4="" sdb="" sdc="" sdd="" sde="" sdf="" sdg="" sdh="" vg00="">
```

```
[root@local-db-01 log]# vgs
WARNING: Failed to connect to lvmetad. Falling back to device scanning.
selabel_open failed: No such file or directory
selabel_open failed: No such file or directory
selabel_open failed: No such file or directory
selabel_open failed: No such file or directory
selabel_open failed: No such file or directory
VG #PV #LV #SN Attr VSize VFree
BITS_GRP 1 1 0 wz--n- <200 .00g="" 0="" 1="" 255.99g="" 2="" 4="" 511.98g="" 74.37g="" 8="" code="" data_grp="" g="" reco_grp="" vg00="" wz--n-="">
```

```
[root@local-db-01 log]# lvs
WARNING: Failed to connect to lvmetad. Falling back to device scanning.
selabel_open failed: No such file or directory
selabel_open failed: No such file or directory
selabel_open failed: No such file or directory
selabel_open failed: No such file or directory
selabel_open failed: No such file or directory
LV            VG       Attr       LSize   Pool Origin Data%  Meta%  Move Log Cpy%Sync Convert
BITS          BITS_GRP -wi------- 200.00g
DATA          DATA_GRP -wi------- 511.98g
RECO          RECO_GRP -wi------- 255.99g
home          vg00     -wi-ao---- 1.00g
opt           vg00     -wi-ao---- 35.00g
root          vg00     -wi-ao---- 10.00g
swap          vg00     -wi-ao---- 10.00g
var           vg00     -wi-ao---- 10.00g
var_log       vg00     -wi-ao---- 4.00g
var_log_audit vg00     -wi-ao---- 2.00g
var_tmp       vg00     -wi-a----- 1.00g
```

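This confirms that the volume groups and logical volumes still exist. The problem is that the Oracle LVs are not activated at boot, so they cannot be mounted. In the Attr column, the fifth character is "a" for an active volume. The vg00 volumes show -wi-ao---- (active and open), while BITS, DATA, and RECO show -wi------- (inactive). The repeated selabel_open failed messages also point to SELinux.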
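You can also script this Attr check instead of reading it by eye. This is a minimal sketch. It assumes the standard lvs report fields vg_name, lv_name, and lv_attr, and inactive_lvs is a hypothetical helper name.

```shell
# Minimal sketch: list LVs that exist but are not active.
# inactive_lvs is a hypothetical helper; it reads "VG LV ATTR" rows such as:
#   lvs --noheadings -o vg_name,lv_name,lv_attr
# In lv_attr, the 5th character is the state: 'a' means active.
inactive_lvs() {
    awk 'NF >= 3 && substr($3, 5, 1) != "a" { print $1 "/" $2 }'
}

# Example:
# lvs --noheadings -o vg_name,lv_name,lv_attr | inactive_lvs
```

Against the lvs output above, this would report BITS_GRP/BITS, DATA_GRP/DATA, and RECO_GRP/RECO. Every vg00 volume would count as active, including var_tmp (-wi-a-----), which is active but not open.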

We found a My Oracle Support (formerly MetaLink) note, Oracle Linux: Network Service Fails to Start While SELinux Is Enabled (Doc ID 2508103.1). It describes the same issue, caused by the SELINUXTYPE=minimum setting.

To fix it, change the SELinux SELINUXTYPE parameter from minimum to targeted:

```
[root@local-db-01 /]# cat /etc/sysconfig/selinux
# This file controls the state of SELinux on the system.
# SELINUX= can take one of these three values:
# enforcing - SELinux security policy is enforced.
# permissive - SELinux prints warnings instead of enforcing.
# disabled - No SELinux policy is loaded.
SELINUX=permissive
# SELINUXTYPE= can take one of these two values:
# targeted - Targeted processes are protected,
# minimum - Modification of targeted policy. Only selected processes are protected.
# mls - Multi Level Security protection.
SELINUXTYPE=targeted
```
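The change itself is a one-line edit, shown below as a minimal sketch. It assumes the targeted policy package (selinux-policy-targeted) is already installed, and set_selinux_targeted is a hypothetical helper. On Oracle Linux, /etc/sysconfig/selinux is typically a symlink to /etc/selinux/config. GNU sed -i replaces a symlink with a regular file unless --follow-symlinks is given. This sketch avoids that by writing the new content back through the original path with cat.

```shell
# Minimal sketch: switch SELINUXTYPE to targeted in an SELinux config file.
# set_selinux_targeted is a hypothetical helper. It assumes the targeted
# policy (package selinux-policy-targeted) is already installed.
set_selinux_targeted() {
    cfg=${1:-/etc/selinux/config}
    cp -p "$cfg" "$cfg.bak" || return 1        # keep a backup copy first
    tmp=$(mktemp) || return 1
    if ! sed 's/^SELINUXTYPE=.*/SELINUXTYPE=targeted/' "$cfg" > "$tmp"; then
        rm -f "$tmp"
        return 1
    fi
    # Copy the edited content back with cat so the original file, and any
    # symlink pointing at it, stays in place instead of being replaced.
    cat "$tmp" > "$cfg"
    rm -f "$tmp"
    grep '^SELINUXTYPE=' "$cfg"                 # show the resulting setting
}

# Example (as root); the new policy type is loaded at the next boot:
# set_selinux_targeted /etc/selinux/config
```

The function keeps a .bak copy of the original file. It also prints the resulting SELINUXTYPE line, so you can confirm the change before restarting the VM.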

After changing the SELinux parameter, I recommend restarting the VM, because the policy type is loaded at boot. Once the VM is up, validate the disk layout with df -h. The Oracle mount points /u01, /u02, and /u03 are back:

```
[opc@local-dev-db01 ~]$ df -h
Filesystem                     Size  Used Avail Use% Mounted on
devtmpfs                       7.7G     0  7.7G   0% /dev
tmpfs                          7.7G  1.3M  7.7G   1% /dev/shm
tmpfs                          7.7G   65M  7.7G   1% /run
tmpfs                          7.7G     0  7.7G   0% /sys/fs/cgroup
/dev/mapper/vg00-root          9.6G  2.8G  6.3G  31% /
/dev/mapper/vg00-var           9.6G  1.8G  7.4G  20% /var
/dev/sda2                      488M  143M  311M  32% /boot
/dev/mapper/vg00-home          960M  202M  692M  23% /home
/dev/mapper/vg00-var_log       3.8G   34M  3.6G   1% /var/log
/dev/mapper/vg00-var_log_audit 1.9G   43M  1.8G   3% /var/log/audit
/dev/mapper/vg00-opt            34G  6.1G   27G  19% /opt
/dev/sda1                      128M  7.5M  121M   6% /boot/efi
tmpfs                          7.7G  4.6M  7.7G   1% /var/tmp
tmpfs                          7.7G   17M  7.7G   1% /tmp
/dev/mapper/RECO_GRP-RECO      251G   45G  194G  19% /u03
/dev/mapper/BITS_GRP-BITS      196G   23G  164G  13% /u01
/dev/mapper/DATA_GRP-DATA      503G  233G  245G  49% /u02
tmpfs                          1.6G     0  1.6G   0% /run/user/101
tmpfs                          1.6G     0  1.6G   0% /run/user/1000
```
