
Not long ago, Oracle and Microsoft announced a new level of cooperation in the public cloud: they are interlinking their clouds so that you can use each one where it is strongest. For example, you can run an application on Azure and use an Oracle database in Oracle Cloud Infrastructure (OCI). This was possible before in some regions, but it took multiple steps on both sides and a third-party network provider to interlink Oracle FastConnect and Azure ExpressRoute. Now it can be done using the Azure and OCI interfaces alone. So far the option exists only in the US Washington DC area, where you have the OCI Ashburn and Azure Washington DC regions. I have tried it and found that it works, although not without some surprises.

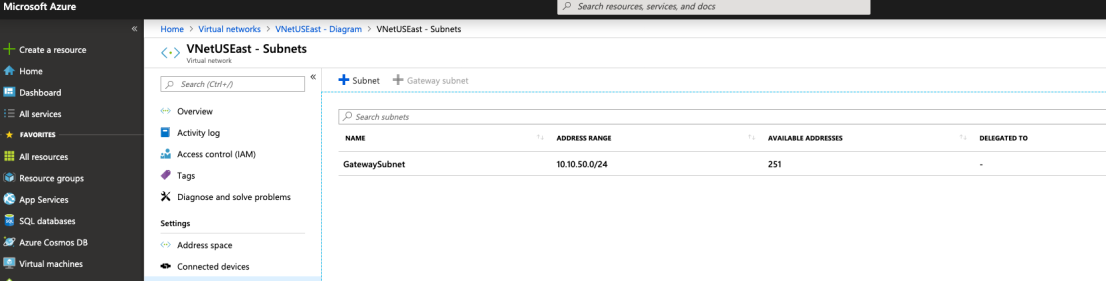

I started on Azure by creating a virtual network (VNet) and adding two subnets: a regular subnet and a gateway subnet. We need the latter for the Virtual Network Gateway (VNG). Keep in mind that all resources should be created in the East US region.

The easiest way to create the VNG is to type the name of the resource type into the search box when you create a resource.

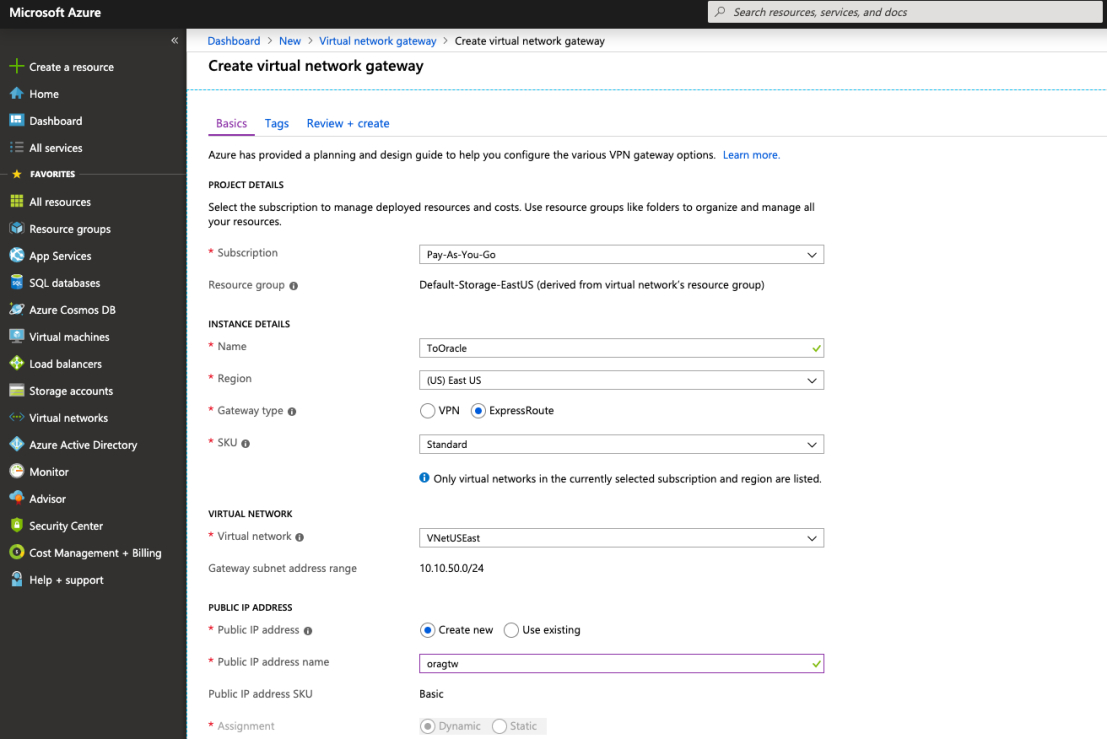

The creation of the gateway is quite straightforward. Don’t forget to use the “ExpressRoute” type and pick the right network with the previously created gateway subnet.

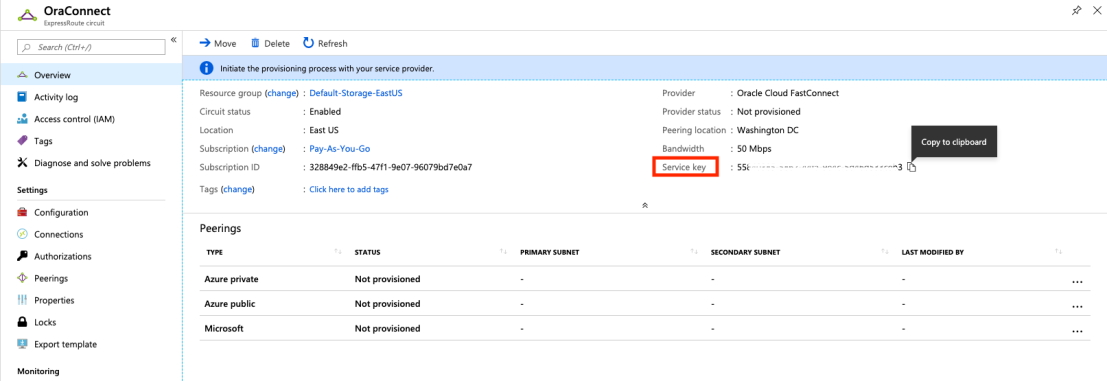

The next step is to add an ExpressRoute circuit to your network. During the creation you need to enter a name for the ExpressRoute circuit, choose “Oracle Cloud FastConnect” as the provider and set the billing parameters. I chose 50 Mbps with the “Standard” SKU and the “Metered” billing model because it was the cheapest. It should be enough for tests, but for a heavy production workload with a lot of traffic another configuration could be preferable.

If you look at the ExpressRoute circuit status you will see that the peering is not set up yet. We need to continue the setup on the OCI side. There we will need the Service Key from the ExpressRoute circuit status page.

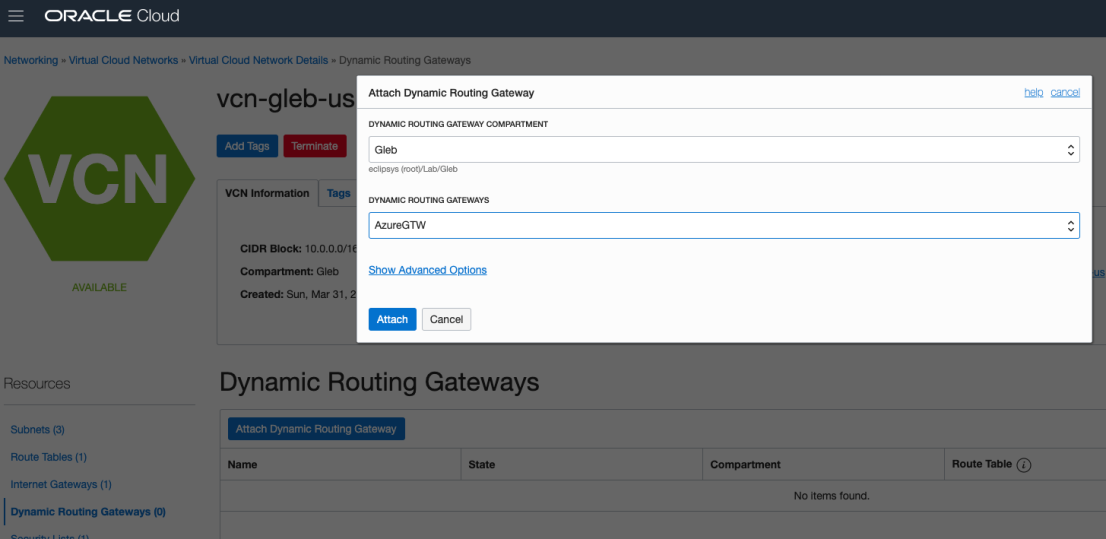

On the OCI side we have to prepare a VCN with subnets and a Dynamic Routing Gateway (DRG) attached to it, all in the Ashburn region.

The DRG has to be added before the Oracle FastConnect.

After creating the gateway we need to attach it to our network.

The next step is to create the FastConnect connection using the “Microsoft Azure: ExpressRoute” provider.

For that connection we provide our gateway, the service key we copied from the Azure ExpressRoute circuit, and the network details for the BGP addresses.

Soon after that the connection will be up, and we will need to configure routing and security rules to access the network. My subnet on Azure used the 10.10.40.0/24 range, so I added a route for that subnet on the OCI side.

On the Azure side we need to add a connection that links our Virtual Network Gateway to the ExpressRoute circuit.

The rest of the steps are related to setting up security rules on Azure and on the OCI VCN, and adjusting firewall rules on the VMs we want to connect to each other. For example, we need to create a route on OCI that directs traffic to the subnet on Azure. On Azure I have the 10.10.40.0/24 subnet, so I need to create a route on OCI for it.
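The routing requirement above can be sanity-checked offline before touching either console. Here is a minimal sketch in Python using only the standard `ipaddress` module. The Azure subnet 10.10.40.0/24 and the host address 10.10.40.4 come from this post; the OCI subnet CIDR 10.0.1.0/24 is an assumption inferred from the OCI host address 10.0.1.2, and the function names are hypothetical.

```python
import ipaddress

# Azure subnet from the post. The OCI subnet CIDR is an assumption
# inferred from the OCI host address 10.0.1.2 used in the tests.
AZURE_SUBNET = ipaddress.ip_network("10.10.40.0/24")
OCI_SUBNET = ipaddress.ip_network("10.0.1.0/24")  # assumed


def ranges_overlap(a, b):
    """Return True if the two networks share any address."""
    return a.overlaps(b)


def matching_route(destination, routes):
    """Return the most specific route covering destination, or None."""
    ip = ipaddress.ip_address(destination)
    candidates = [net for net in routes if ip in net]
    return max(candidates, key=lambda net: net.prefixlen, default=None)


if __name__ == "__main__":
    # Overlapping ranges on the two sides would make the routes ambiguous.
    print("overlap:", ranges_overlap(AZURE_SUBNET, OCI_SUBNET))
    # Destination of the route rule on the OCI side for Azure-bound traffic.
    oci_routes = [AZURE_SUBNET]
    print("route for 10.10.40.4:", matching_route("10.10.40.4", oci_routes))
    print("route for 192.168.1.1:", matching_route("192.168.1.1", oci_routes))
```

The same check works in the other direction if you swap the subnets, which is handy when the address plan grows beyond one subnet per side.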

I had a Linux VM on the Azure side and another one on OCI. The connection worked pretty well and throughput was about the same in both directions, but I got quite different results for latency.

The ping times were roughly the same in both directions, but I noticed that from the Azure side they were a bit less stable.

```
[opc@gleb-bastion-us ~]$ ping 10.10.40.4
PING 10.10.40.4 (10.10.40.4) 56(84) bytes of data.
64 bytes from 10.10.40.4: icmp_seq=1 ttl=62 time=2.01 ms
64 bytes from 10.10.40.4: icmp_seq=2 ttl=62 time=2.09 ms

[otochkin@us-east-lin-01 ~]$ ping 10.0.1.2
PING 10.0.1.2 (10.0.1.2) 56(84) bytes of data.
64 bytes from 10.0.1.2: icmp_seq=1 ttl=61 time=2.66 ms
64 bytes from 10.0.1.2: icmp_seq=2 ttl=61 time=1.90 ms
64 bytes from 10.0.1.2: icmp_seq=3 ttl=61 time=2.44 ms
```

A copy of a random file was quite fast too, even though I noticed some slowdown during the copy from OCI to Azure.

```
[opc@gleb-bastion-us ~]$ scp new_random_file.out otochkin@10.10.40.4:~/
Enter passphrase for key '/home/opc/.ssh/id_rsa':
new_random_file.out                      100% 1000MB  20.6MB/s   00:48
[opc@gleb-bastion-us ~]$ scp new_random_file.out otochkin@10.10.40.4:~/
Enter passphrase for key '/home/opc/.ssh/id_rsa':
new_random_file.out                      100% 1000MB  21.3MB/s   00:46
[opc@gleb-bastion-us ~]$ scp new_random_file.out otochkin@10.10.40.4:~/
Enter passphrase for key '/home/opc/.ssh/id_rsa':
new_random_file.out                      100% 1000MB  12.2MB/s   01:21
[opc@gleb-bastion-us ~]$
```
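To put a number on "less stable", the ping samples above can be summarised by their mean and spread. This is a minimal sketch: the sample lines are copied from the sessions above, the function names are hypothetical, and with only two or three samples per direction the result is an illustration rather than a measurement.

```python
import re
import statistics

# Ping reply lines copied from the sessions in this post.
OCI_TO_AZURE = """\
64 bytes from 10.10.40.4: icmp_seq=1 ttl=62 time=2.01 ms
64 bytes from 10.10.40.4: icmp_seq=2 ttl=62 time=2.09 ms
"""
AZURE_TO_OCI = """\
64 bytes from 10.0.1.2: icmp_seq=1 ttl=61 time=2.66 ms
64 bytes from 10.0.1.2: icmp_seq=2 ttl=61 time=1.90 ms
64 bytes from 10.0.1.2: icmp_seq=3 ttl=61 time=2.44 ms
"""


def rtt_samples(ping_output):
    """Extract round-trip times in milliseconds from ping reply lines."""
    return [float(value) for value in re.findall(r"time=([\d.]+) ms", ping_output)]


def summarize(samples):
    """Return the mean and the max-min spread of the samples, in milliseconds."""
    return {
        "mean_ms": statistics.mean(samples),
        "spread_ms": max(samples) - min(samples),
    }


if __name__ == "__main__":
    for label, output in (("OCI -> Azure", OCI_TO_AZURE), ("Azure -> OCI", AZURE_TO_OCI)):
        stats = summarize(rtt_samples(output))
        print(f"{label}: mean {stats['mean_ms']:.2f} ms, spread {stats['spread_ms']:.2f} ms")
```

For a real comparison you would feed it a longer run, for example the output of `ping -c 100`.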

I found that the slowness was caused by IO performance on the Azure instance. The instance was not big enough to provide sufficient disk IO, so the network was not the bottleneck there.
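One quick way to check whether the disk rather than the network limits a copy is to time a sequential write on the receiving VM. The sketch below is an assumption about how such a check could look, not the method used for this post: the function name and sizes are hypothetical, and the figure it returns is only a rough indication because it depends on caching and on the temporary directory's file system.

```python
import os
import tempfile
import time


def write_throughput_mb_s(directory, size_mb=64, chunk_mb=1):
    """Write size_mb of random data to a temp file in directory and return MB/s."""
    chunk = os.urandom(chunk_mb * 1024 * 1024)
    chunks = size_mb // chunk_mb
    # The temporary file is deleted automatically when the block exits.
    with tempfile.NamedTemporaryFile(dir=directory) as f:
        start = time.perf_counter()
        for _ in range(chunks):
            f.write(chunk)
        f.flush()
        os.fsync(f.fileno())  # make sure the data has reached the disk
        elapsed = time.perf_counter() - start
    return (chunks * chunk_mb) / elapsed


if __name__ == "__main__":
    rate = write_throughput_mb_s(tempfile.gettempdir())
    print(f"sequential write: {rate:.1f} MB/s")
```

If the number it prints is close to the rate `scp` reports, the disk is a likely suspect.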

I did some tests using iperf3 and oratcptest, the Oracle network performance tool (Measuring Network Capacity using oratcptest (Doc ID 2064368.1)). At first, I found a significant difference in latency.

From OCI to Azure the latency was about 19 ms:

```
[opc@gleb-bastion-us ~]$ java -jar oratcptest.jar 10.10.40.4 -port=5555 -duration=10s -interval=2s
[Requesting a test]
        Message payload        = 1 Mbyte
        Payload content type   = RANDOM
        Delay between messages = NO
        Number of connections  = 1
        Socket send buffer     = (system default)
        Transport mode         = SYNC
        Disk write             = NO
        Statistics interval    = 2 seconds
        Test duration          = 10 seconds
        Test frequency         = NO
        Network Timeout        = NO
        (1 Mbyte = 1024x1024 bytes)

(20:49:52) The server is ready.
                        Throughput                 Latency
(20:49:54)         51.633 Mbytes/s               19.368 ms
(20:49:56)         53.480 Mbytes/s               18.699 ms
(20:49:58)         52.959 Mbytes/s               18.883 ms
(20:50:00)         53.115 Mbytes/s               18.827 ms
(20:50:02)         53.355 Mbytes/s               18.743 ms
(20:50:02) Test finished.
               Socket send buffer = 813312 bytes
                  Avg. throughput = 52.883 Mbytes/s
                     Avg. latency = 18.910 ms

[opc@gleb-bastion-us ~]$
```
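When comparing several runs it helps to pull the two summary figures out of the output instead of reading them by eye. This is a small sketch that assumes the summary lines look exactly like the ones above; the function name is hypothetical and the pattern has only been checked against this output.

```python
import re

# Summary lines copied from the run above.
SUMMARY = """\
(20:50:02) Test finished.
Socket send buffer = 813312 bytes
Avg. throughput = 52.883 Mbytes/s
Avg. latency = 18.910 ms
"""


def parse_summary(text):
    """Return (avg throughput in Mbytes/s, avg latency in ms) from oratcptest output."""
    throughput = re.search(r"Avg\. throughput\s*=\s*([\d.]+) Mbytes/s", text)
    latency = re.search(r"Avg\. latency\s*=\s*([\d.]+) ms", text)
    if not (throughput and latency):
        raise ValueError("summary lines not found")
    return float(throughput.group(1)), float(latency.group(1))


if __name__ == "__main__":
    mbytes_per_s, latency_ms = parse_summary(SUMMARY)
    print(f"throughput {mbytes_per_s} Mbytes/s, latency {latency_ms} ms")
```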

But in the other direction, connecting from Azure back to a server on OCI, the latency was about 85 ms, roughly 4.5 times higher.

```
[azureopc@us-east-lin-01 ~]$ java -jar oratcptest.jar 10.0.1.2 -port=5555 -duration=10s -interval=2s
[Requesting a test]
        Message payload        = 1 Mbyte
        Payload content type   = RANDOM
        Delay between messages = NO
        Number of connections  = 1
        Socket send buffer     = (system default)
        Transport mode         = SYNC
        Disk write             = NO
        Statistics interval    = 2 seconds
        Test duration          = 10 seconds
        Test frequency         = NO
        Network Timeout        = NO
        (1 Mbyte = 1024x1024 bytes)

(21:33:59) The server is ready.
                        Throughput                 Latency
(21:34:01)         11.847 Mbytes/s               84.411 ms
(21:34:03)         11.736 Mbytes/s               85.206 ms
(21:34:05)         11.690 Mbytes/s               85.546 ms
(21:34:07)         11.699 Mbytes/s               85.477 ms
(21:34:09)         11.737 Mbytes/s               85.205 ms
(21:34:09) Test finished.
               Socket send buffer = 881920 bytes
                  Avg. throughput = 11.732 Mbytes/s
                     Avg. latency = 85.238 ms

[azureopc@us-east-lin-01 ~]$
```

After digging in and troubleshooting, I found that the problem was my instance on the Azure side. It was too small and too slow to run the test at proper speed. After I increased the Azure instance size, it showed about the same latency as the other direction.

```
[azureopc@us-east-lin-01 ~]$ java -jar oratcptest.jar 10.0.1.2 -port=5555 -duration=10s -interval=2s
[Requesting a test]
        Message payload        = 1 Mbyte
        Payload content type   = RANDOM
        Delay between messages = NO
        Number of connections  = 1
        Socket send buffer     = (system default)
        Transport mode         = SYNC
        Disk write             = NO
        Statistics interval    = 2 seconds
        Test duration          = 10 seconds
        Test frequency         = NO
        Network Timeout        = NO
        (1 Mbyte = 1024x1024 bytes)

(16:44:11) The server is ready.
                        Throughput                 Latency
(16:44:13)         59.449 Mbytes/s               16.821 ms
(16:44:15)         59.127 Mbytes/s               16.913 ms
(16:44:17)         59.367 Mbytes/s               16.845 ms
(16:44:19)         59.015 Mbytes/s               16.945 ms
(16:44:21)         59.136 Mbytes/s               16.910 ms
(16:44:21) Test finished.
               Socket send buffer = 965120 bytes
                  Avg. throughput = 59.199 Mbytes/s
                     Avg. latency = 16.892 ms

[azureopc@us-east-lin-01 ~]$
```
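One detail worth noticing in these outputs: in all three runs the reported throughput is almost exactly the 1 Mbyte payload divided by the reported latency. That suggests that in SYNC mode with a single connection the "latency" figure is the time to send one full message and get it acknowledged, rather than a bare network round trip like ping. This is my reading of the numbers above, not a statement from the tool's documentation, and the function name below is hypothetical.

```python
PAYLOAD_MBYTES = 1.0  # "Message payload = 1 Mbyte" in the test header

# (label, avg throughput in Mbytes/s, avg latency in ms), copied from the three runs.
RUNS = [
    ("OCI -> Azure", 52.883, 18.910),
    ("Azure -> OCI, small instance", 11.732, 85.238),
    ("Azure -> OCI, larger instance", 59.199, 16.892),
]


def expected_throughput(latency_ms, payload_mbytes=PAYLOAD_MBYTES):
    """Throughput in Mbytes/s if one payload completes per latency interval."""
    return payload_mbytes / (latency_ms / 1000.0)


if __name__ == "__main__":
    for label, reported, latency_ms in RUNS:
        expected = expected_throughput(latency_ms)
        print(f"{label}: reported {reported:.3f}, payload/latency {expected:.3f} Mbytes/s")
```

Read this way, the 85 ms figure from the small instance fits the explanation above: a slow VM takes longer to handle each 1 Mbyte message, and the tool reports that as latency.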

In summary, setting up the hybrid cloud was easy and transparent enough, and it showed good performance. I think it has great potential. I hope the option will be available in other regions soon, and I am looking forward to it becoming available in the Toronto region.
