Terraform Modules Simplified

Terraform is probably already the de-facto standard for cloud deployment. I use it on a daily basis deploying and destroying my tests and demo setups ... Read More

Learn more about why Eclipsys has been named the 2023 Best Workplaces in Technology and Ontario, Certified as a Great Place to Work in Canada and named Canada’s Top SME Employer!

Learn more!We’ve already discussed how to migrate databases from a standalone 12.2 database to a pluggable database (PDB) in a 19c container in the Oracle cloud. But what if the target container database (CDB) is already part of a Data Guard configuration and has several PDB in it? I will try to go through the main steps on how to do that without breaking the replication.

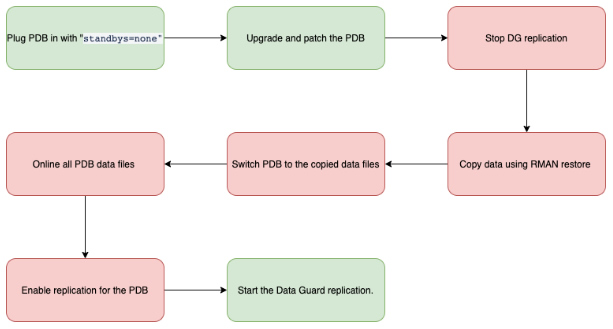

From the high level the process boiled down to several main steps. We plugged in the new database with the clause “standbys=none”. Then we did all our patching, upgrade and all necessary configuration changes. After that I could instantiate the data on the disaster recovery (DR) site in our standby PDB. The final steps made the new PDB on standby site to use the copied data files and enabled the replication.

Here is the high level flowchart for the process:

We started from adding the new PDB on the primary side. You might notice the clause “standbys=none” in the command.

testdb011> CREATE pluggable DATABASE testdg02 AS clone USING '/home/oracle/working/bcadmgo/pdbcdb2.xml' standbys=NONE; Pluggable DATABASE created. testdb011> SELECT name,open_mode,recovery_status FROM v$pdbs; NAME OPEN_MODE RECOVERY ------------------------------------------------------------ ---------- -------- PDB$SEED READ ONLY ENABLED TESTPDB1 READ ONLY ENABLED TESTDG01 READ ONLY ENABLED TESTDG02 MOUNTED DISABLED testdb011> ALTER pluggable DATABASE TESTDG02 OPEN upgrade; Pluggable DATABASE altered. testdb011> SHOW pdbs CON_ID CON_NAME OPEN MODE RESTRICTED --------------------- ------------------------------ ---------- ---------- 2.00 PDB$SEED READ ONLY NO 3.00 TESTPDB1 READ WRITE NO 4.00 TESTDG01 READ WRITE NO 5.00 TESTDG02 MIGRATE YES testdb011>

You can see in the output the database plugged in and in “MIGRATE” state on the primary side and the recovery state is “DISABLED” . What would we see on the DR site?

testdb011> SELECT name,open_mode,recovery_status FROM v$pdbs; NAME OPEN_MODE RECOVERY ------------------------------------------------------------ ---------- -------- PDB$SEED READ ONLY ENABLED TESTPDB1 READ ONLY ENABLED TESTDG01 READ ONLY ENABLED TESTDG02 MOUNTED DISABLED testdb011>

The state is “MOUNTED” and recovery is “DISABLED”. It was expected but what should it show for data files since nothing was really replicated. The alert log showed us that the PDB was plugged into the standby CDB with nonexistent datafile using name pattern UNNAMED*.

Recovery created pluggable database TESTDG02 TESTDG02(5):File #26 added to control file as 'UNNAMED00026'. Originally created as: TESTDG02(5):'+DATAC1/TESTDB01_MONCTON/A748656F0AC4F483E0530AFE10AC16AA/DATAFILE/system.555.1042217171' TESTDG02(5):because the pluggable database was created with nostandby TESTDG02(5):or the tablespace belonging to the pluggable database is TESTDG02(5):offline. TESTDG02(5):File #27 added to control file as 'UNNAMED00027'. Originally created as: TESTDG02(5):'+DATAC1/TESTDB01_MONCTON/A748656F0AC4F483E0530AFE10AC16AA/DATAFILE/sysaux.556.1042217171' TESTDG02(5):because the pluggable database was created with nostandby TESTDG02(5):or the tablespace belonging to the pluggable database is TESTDG02(5):offline. TESTDG02(5):File #28 added to control file as 'UNNAMED00028'. Originally created as: TESTDG02(5):'+DATAC1/TESTDB01_MONCTON/A748656F0AC4F483E0530AFE10AC16AA/DATAFILE/undotbs1.557.1042217171' TESTDG02(5):because the pluggable database was created with nostandby TESTDG02(5):or the tablespace belonging to the pluggable database is ...

After all patching and upgrade activities on the primary side which you could read in the previous post we were able to open the database. The next step was to make an actual copy of the PDB on the DR site. We used RMAN restore “from service” option. It copied all PDB data files from the source over the network.

Before running the script I recommend to make sure no archived logs are going to be deleted on primary and standby. You can just run the command on both sides to change the RMAN archivelog deletion policy. And monitor the FRA free size after running it.

RMAN> configure archivelog deletion policy to none;

And if you were using Transparent Data Encryption (TDE) you should have copied your updated wallet over to be able to read the encrypted data files. In the Oracle cloud it will be certainly the case.

The RMAN script is to copy the data was quite simple:

run{ set newname for pluggable database TESTDG02 to new; restore pluggable database TESTDG02 from service testdb01_moncton; }

After running the script we got the copy of all data files for our standby PDB. After doing that we should stop our replication. We used the Data Guard Broker and our command looked like that:

DGMGRL> show configuration Configuration - dgconf_testdb01 Protection Mode: MaxPerformance Members: testdb01_moncton - Primary database testdb01_halifax - Physical standby database Fast-Start Failover: Disabled Configuration Status: SUCCESS (status updated 105 seconds ago) DGMGRL> edit database testdb01_halifax set state='apply-off'; Succeeded. DGMGRL>

Having the replication stopped we switched our standby PDB to use the copied files.

RMAN> switch pluggable database TESTDG02 to copy; datafile 26 switched to datafile copy "+DATAC1/TESTDB01_HALIFAX/A748656F0AC4F483E0530AFE10AC16AA/DATAFILE/system.519.1042285693" datafile 27 switched to datafile copy "+DATAC1/TESTDB01_HALIFAX/A748656F0AC4F483E0530AFE10AC16AA/DATAFILE/sysaux.520.1042285701" datafile 28 switched to datafile copy "+DATAC1/TESTDB01_HALIFAX/A748656F0AC4F483E0530AFE10AC16AA/DATAFILE/undotbs1.521.1042285709" datafile 29 switched to datafile copy "+DATAC1/TESTDB01_HALIFAX/A748656F0AC4F483E0530AFE10AC16AA/DATAFILE/undo_2.522.1042285713" datafile 30 switched to datafile copy "+DATAC1/TESTDB01_HALIFAX/A748656F0AC4F483E0530AFE10AC16AA/DATAFILE/users.523.1042285719" RMAN>

And enabled the replication for the PDB:

testdb011> ALTER SESSION SET container=TESTDG02; SESSION altered. testdb011> ALTER pluggable DATABASE enable recovery; Pluggable DATABASE altered. testdb011>

That would not be enough if you had database version 18 and higher. You should change status for the datafile to online. We had version 19.7 and ran a script to online the files.

testdb011> ALTER DATABASE datafile '+DATAC1/TESTDB01_HALIFAX/A748656F0AC4F483E0530AFE10AC16AA/DATAFILE/system.519.1042285693' online; ALTER DATABASE datafile '+DATAC1/TESTDB01_HALIFAX/A748656F0AC4F483E0530AFE10AC16AA/DATAFILE/sysaux.520.1042285701' online; ALTER DATABASE datafile '+DATAC1/TESTDB01_HALIFAX/A748656F0AC4F483E0530AFE10AC16AA/DATAFILE/undotbs1.521.1042285709' online; ALTER DATABASE datafile '+DATAC1/TESTDB01_HALIFAX/A748656F0AC4F483E0530AFE10AC16AA/DATAFILE/undo_2.522.1042285713' online; ALTER DATABASE datafile '+DATAC1/TESTDB01_HALIFAX/A748656F0AC4F483E0530AFE10AC16AA/DATAFILE/users.523.1042285719' online; DATABASE altered.

Everything was ready and the last task was to resume the replication in the Data Guard Broker:

DGMGRL> edit database testdb01_halifax set state='apply-on'; Succeeded. DGMGRL> DGMGRL> disable configuration Disabled. DGMGRL> enable configuration Enabled. DGMGRL>

We could see that the replication was enabled for the PDB:

testdb011> SELECT name,open_mode,recovery_status FROM v$pdbs; NAME OPEN_MODE RECOVERY ------------------------------------------------------------ ---------- -------- PDB$SEED MOUNTED ENABLED TESTPDB1 MOUNTED ENABLED TESTDG01 MOUNTED ENABLED TESTDG02 MOUNTED ENABLED

The rest of the action such as switching the database to read-only mode (if you have Active Data Guard) or other configuration steps are up to your requirements.

As you could see the procedure is not complicated but still have quite a few steps to execute. Some steps could be and should be automated but it would be subject for another topic. Happy migrations and stay tuned.

Terraform is probably already the de-facto standard for cloud deployment. I use it on a daily basis deploying and destroying my tests and demo setups ... Read More

If you’ve been following the recent changes in the Linux world you probably remember how Red Hat and Centos announced in December 2020 that the ... Read More