


GCP Cloud Shell not only lets you execute and automate tasks around your cloud resources, it can also help you understand what happens behind the scenes when, for example, a VM is provisioned. That’s also a good way to prepare for the GCP Cloud Engineer certification. Although following the course behind this quick lab normally requires an ACloudGuru subscription, they made the startup script available for free on their GitHub. That’s all we need to demonstrate how to complete the task using gcloud commands, which were not covered in the original course.

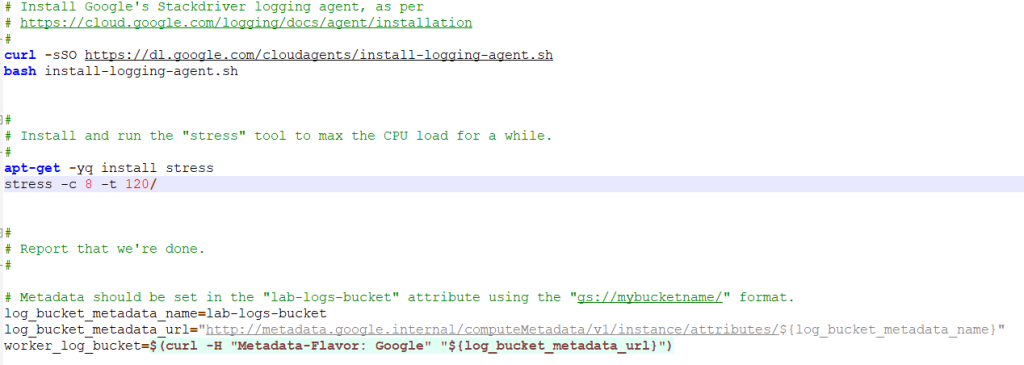

Here’s a direct link to the shell script in their GitHub repo: gcp-cloud-engineer/compute-labs/worker-startup-script.sh

In this exercise, we want to learn what a Compute Engine instance requires in order to run a script at launch that installs a logging agent, runs a stress test, sends all syslog events to Google Cloud Logging, and writes its output to a bucket. You’ll see that underlying service accounts and permission scopes are what allow a machine to interact with other cloud services and APIs to get the job done. To sum it up:

I used Cloud Shell to complete this lab. However, you can also run gcloud commands from your workstation with the Google Cloud SDK.

$ gcloud projects create gcs-gce-project-lab --name="GCS & GCE LAB" --labels=type=lab

$ gcloud config set project gcs-gce-project-lab

Link the new project with a billing account

$ gcloud beta billing accounts list

ACCOUNT_ID            NAME                      OPEN  MASTER_ACCOUNT_ID
0X0X0X-0X0X0X-0X0X0X  Brokedba Billing account  True

** link the project to a billing account **

$ gcloud alpha billing accounts projects link gcs-gce-project-lab \
    --billing-account=0X0X0X-0X0X0X-0X0X0X

** OR **

$ gcloud beta billing projects link gcs-gce-project-lab --billing-account=0X0X0X-0X0X0X-0X0X0X

GCE APIs

$ gcloud services enable compute.googleapis.com

$ gcloud services enable computescanning.googleapis.com

Active Config in Cloud Shell

$ gcloud config set compute/region us-east1

$ gcloud config set compute/zone us-east1-b

Project level

$ gcloud compute project-info add-metadata \
    --metadata google-compute-default-region=us-east1,google-compute-default-zone=us-east1-b \
    --project gcs-gce-project-lab
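Since a GCE zone name is simply its region plus a one-letter suffix (us-east1-b belongs to us-east1), one way to keep the Cloud Shell defaults and the project-level defaults from drifting apart is to derive the region from the zone. This is a minimal sketch assuming bash; `zone_to_region` and `set_compute_defaults` are hypothetical helper names, not gcloud features. The gcloud calls are the same ones shown above.

```shell
# Hypothetical helpers (not part of gcloud): keep region and zone defaults in sync.

# A GCE zone name is "<region>-<letter>", e.g. us-east1-b -> us-east1.
zone_to_region() {
  printf '%s\n' "${1%-*}"   # strip the shortest trailing "-<suffix>"
}

# Apply both the Cloud Shell defaults and the project-level defaults from one zone.
# Usage: set_compute_defaults gcs-gce-project-lab us-east1-b
set_compute_defaults() {
  local project=$1 zone=$2 region
  region=$(zone_to_region "$zone")
  gcloud config set compute/region "$region"
  gcloud config set compute/zone "$zone"
  gcloud compute project-info add-metadata \
    --metadata "google-compute-default-region=${region},google-compute-default-zone=${zone}" \
    --project "$project"
}
```

Passing only the zone means a typo cannot leave you with, say, a us-east1 region default and a zone from another region.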

PROJECT number

$ gcloud projects describe gcs-gce-project-lab | grep projectNumber
projectNumber: '521829558627'

Derived service account name => 521829558627-compute@developer.gserviceaccount.com
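The derivation above follows the Compute Engine default service account naming, PROJECT_NUMBER-compute@developer.gserviceaccount.com. Instead of grepping the YAML output, gcloud’s `--format` flag can print the project number on its own. A minimal sketch assuming bash; `compute_default_sa` and `project_number` are hypothetical helper names.

```shell
# Compute Engine default service account: PROJECT_NUMBER-compute@developer.gserviceaccount.com
compute_default_sa() {
  printf '%s-compute@developer.gserviceaccount.com\n' "$1"
}

# Print only the project number (no grep); requires gcloud and access to the project.
project_number() {
  gcloud projects describe "$1" --format='value(projectNumber)'
}

# Example (in Cloud Shell):
#   SA=$(compute_default_sa "$(project_number gcs-gce-project-lab)")
```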

Download the startup script

$ wget https://raw.githubusercontent.com/ACloudGuru/gcp-cloud-engineer/master/compute-labs/worker-startup-script.sh

Every Compute Engine instance stores its metadata on a metadata server. Your VM automatically has access to the metadata server API without any additional authorization. Metadata is stored as key:value pairs, and there are two types: default and custom. In our example, the bucket name `gs://gcs-gce-bucket` is stored under the custom instance metadata key `lab-logs-bucket`, which our script queries during startup.
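For illustration, this is roughly how a startup script can read a custom key such as `lab-logs-bucket` from the metadata server. It is a sketch assuming bash and curl are present on the image, not a copy of what worker-startup-script.sh actually does; `metadata_attr_url` and `get_metadata_attr` are hypothetical helper names. The endpoint and the mandatory `Metadata-Flavor: Google` header are those of the GCE metadata server.

```shell
# Metadata server base URL, reachable only from inside a GCE VM.
METADATA_BASE="http://metadata.google.internal/computeMetadata/v1"

# URL of a custom instance-metadata key, e.g. lab-logs-bucket.
metadata_attr_url() {
  printf '%s/instance/attributes/%s\n' "$METADATA_BASE" "$1"
}

# Fetch the value; the Metadata-Flavor header is required by the server.
# -f makes curl exit non-zero if the key does not exist.
get_metadata_attr() {
  curl -s -f -H "Metadata-Flavor: Google" "$(metadata_attr_url "$1")"
}

# Example (inside the VM, e.g. in a startup script):
#   LOGS_BUCKET=$(get_metadata_attr lab-logs-bucket)   # gs://gcs-gce-bucket
```

No credentials are involved here, which matches the point above: metadata access needs no additional authorization.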

logs bucket

$ gsutil mb -l us-east1 -p gcs-gce-project-lab gs://gcs-gce-bucket

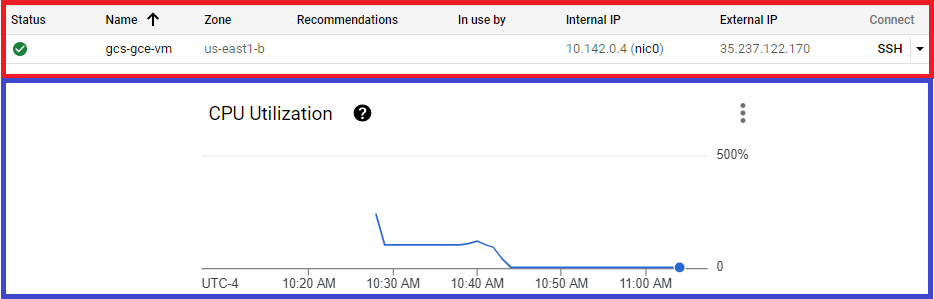

Instance creation

$ gcloud compute instances create gcs-gce-vm \
    --metadata lab-logs-bucket=gs://gcs-gce-bucket \
    --metadata-from-file startup-script=./worker-startup-script.sh \
    --machine-type=f1-micro \
    --image-family debian-10 \
    --image-project debian-cloud \
    --service-account 521829558627-compute@developer.gserviceaccount.com \
    --scopes storage-rw,logging-write,monitoring-write,pubsub,service-management,service-control,trace

NAME        ZONE        MACHINE_TYPE  INTERNAL_IP  EXTERNAL_IP   STATUS
gcs-gce-vm  us-east1-b  f1-micro      10.100.0.1   34.72.95.120  RUNNING

Notice the write scope on GCS (storage-rw): it is what allows our VM to write the logs into the logs bucket.
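To confirm the scope actually landed on the VM, you can read the instance’s scopes back and look for the full scope URL that the storage-rw alias expands to. A minimal sketch assuming bash; `instance_scopes`, `has_scope` and `STORAGE_RW` are hypothetical names. I expect gcloud’s `value()` format to separate list items with `;`, but the helper also accepts commas in case your output differs.

```shell
# Scope URL that the storage-rw alias expands to.
STORAGE_RW="https://www.googleapis.com/auth/devstorage.read_write"

# Print the scopes attached to an instance's first service account.
# Usage: instance_scopes gcs-gce-vm us-east1-b
instance_scopes() {
  gcloud compute instances describe "$1" --zone "$2" \
    --format='value(serviceAccounts[0].scopes)'
}

# Succeed if the scope list ($1, ';' or ',' separated) contains scope $2 exactly.
has_scope() {
  printf '%s\n' "$1" | tr ';,' '\n\n' | grep -qxF "$2"
}

# Example:
#   has_scope "$(instance_scopes gcs-gce-vm us-east1-b)" "$STORAGE_RW" && echo "storage-rw OK"
```

The exact-line match (`grep -x`) avoids a false positive from devstorage.read_only or any other scope that merely shares a prefix.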

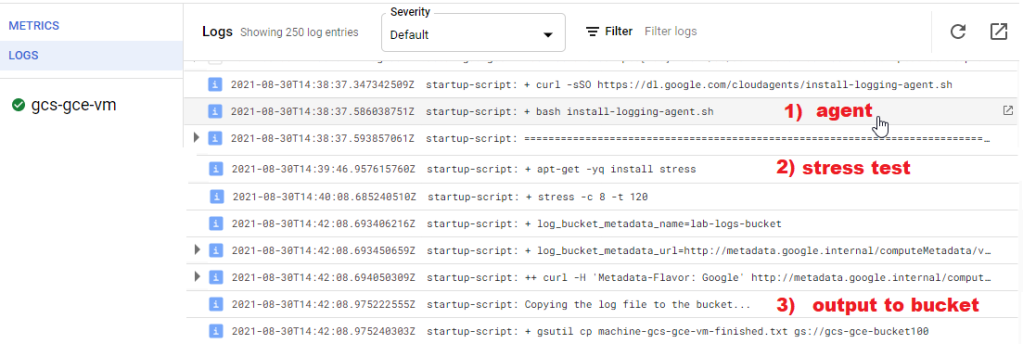

System logs available in Stackdriver Logs

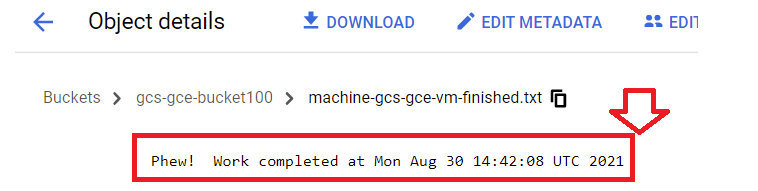

The GCS bucket is created, and the logFile appears in it once the instance finishes starting up

No SSH access to the instance is needed
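Because no SSH is needed, both results can also be checked from Cloud Shell. This is a sketch assuming bash and the lab’s names (gcs-gce-vm, us-east1-b, gcs-gce-project-lab, gcs-gce-bucket); `instance_log_filter` and `check_lab_output` are hypothetical helper names. It filters on the numeric instance ID, since that is the label `gce_instance` log entries carry, rather than on the VM name.

```shell
# Cloud Logging filter for one VM; gce_instance entries are labelled by numeric instance ID.
instance_log_filter() {
  printf 'resource.type="gce_instance" AND resource.labels.instance_id="%s"\n' "$1"
}

# Check both results from Cloud Shell, without SSH to the VM.
check_lab_output() {
  local instance_id
  instance_id=$(gcloud compute instances describe gcs-gce-vm \
    --zone us-east1-b --format='value(id)')
  # Most recent log entries written for this VM
  gcloud logging read "$(instance_log_filter "$instance_id")" \
    --project gcs-gce-project-lab --limit 20
  # Objects written by the startup script
  gsutil ls gs://gcs-gce-bucket
}
```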

Thanks for reading!
