


I want to thank Ludovico Caldara [FPP & Cloud MAA Product Manager @Oracle] for agreeing to the publication of this interview, which is based on a conversation we had some time ago. It mainly focuses on Oracle Fleet Patching and Provisioning (FPP) “FUNDAMENTALS”, but I hope it helps the community better understand which component is which, and what each one does within the architecture, before trying the labs.

Note: If you want the hottest news about FPP, please jump to section 4, “What’s cooking for FPP in 2021”.

Main Topics

1. Storage options for provisioned Software

2. Client/Server relationship

3. Upgrade in FPP

4. What’s cooking for FPP in 2021

○ Helpful resources

— ⚜ “Latin Greetings” ⚜ —

[BrokeDBA]

Hi Ludovico, how are you? Thank you for accepting this interview.

[Ludovico]

Hi BrokeDBA, all good, thanks. And thank you for the invitation!

…

[BrokeDBA]

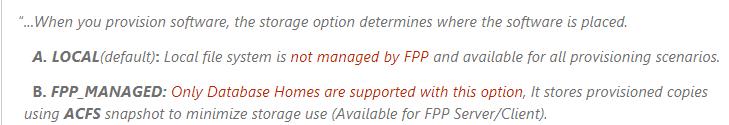

First, I recently read a section of the FPP technical brief that stated something like the following:

My question is: what does “FPP managed” storage really change for provisioning?

Does that mean that if the storage is FPP managed, it can’t store and provision Grid images?

[Ludovico]

The choice to provision software as “LOCAL” or “FPP_MANAGED” relates to the possibility of using ACFS on the client to store a local copy of the gold image and to add the working copy as an ACFS snapshot.

From there, any working copy that you provision from the same gold image (or image series) will be provisioned as an ACFS (Oracle Automatic Storage Management Cluster File System) snapshot of the corresponding image (see link).

The dependency on ACFS makes it impossible to have Grid Infrastructure working copies using RHP/FPP_MANAGED storage.

Image management on the FPP server does not change: you can import and manage both GI and DB images on the FPP server, and they always go into its ACFS file system.
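To make the rule concrete, here is a minimal sketch that encodes it as a lookup table. This is not FPP code: `allowed_storage_types`, `is_allowed` and the string labels are hypothetical, and the table only captures what is stated above. DB working copies may be LOCAL or FPP_MANAGED (an ACFS snapshot). GI working copies cannot be FPP_MANAGED because of the ACFS dependency.

```python
# Hypothetical model of the storage rule described in the interview;
# this is not an FPP or rhpctl API.
ALLOWED_STORAGE = {
    # DB homes: a plain local copy, or an ACFS snapshot of a
    # client-side copy of the gold image (FPP_MANAGED).
    "DB": {"LOCAL", "FPP_MANAGED"},
    # GI homes cannot depend on ACFS, so only LOCAL is allowed.
    "GI": {"LOCAL"},
}


def allowed_storage_types(software_type: str) -> set[str]:
    """Return the storage types a working copy of this software type may use."""
    try:
        return set(ALLOWED_STORAGE[software_type])
    except KeyError:
        raise ValueError(f"unknown software type: {software_type!r}") from None


def is_allowed(software_type: str, storage_type: str) -> bool:
    """True if a working copy of software_type may use storage_type."""
    return storage_type in allowed_storage_types(software_type)


if __name__ == "__main__":
    for sw in ("DB", "GI"):
        print(sw, sorted(allowed_storage_types(sw)))
```

Note that the table says nothing about images: as Ludovico points out, GI and DB images on the FPP server always live in ACFS. The restriction only applies to where GI working copies land.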

[BrokeDBA]

So it’s not a provisioning limitation for simple targets, only for FPP clients? We could still provision working copies of GI gold images stored on the FPP server, just not on FPP_MANAGED storage if the destination is an FPP server or client, correct?

[Ludovico] Correct.

[BrokeDBA]

Does that mean I can only add DB home working copies to FPP_MANAGED storage in the FPP server’s ACFS file system?

[Ludovico]

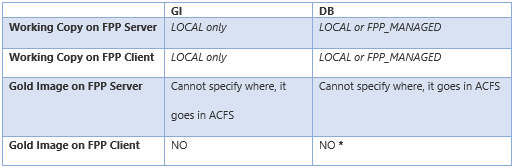

I was referring to images, not working copies. I’ll try to schematize it here:

(*) When provisioning DB working copies on FPP_MANAGED storage, the base ACFS file system contains a copy of the image, but you cannot “add image” to a client.

[BrokeDBA]

So all GI working copies need to be LOCAL, hence the mandatory -path option?

[Ludovico] Correct.
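The two answers above can be combined into a request validator: GI working copies must be LOCAL, and LOCAL storage needs a destination path. This is a minimal sketch. `WorkingCopyRequest` and `validate` are hypothetical names. The path check mirrors the `-path` requirement discussed here, but the code does not call `rhpctl`.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class WorkingCopyRequest:
    """Hypothetical description of a working copy to provision."""
    name: str
    software_type: str          # "DB" or "GI"
    storage_type: str           # "LOCAL" or "FPP_MANAGED"
    path: Optional[str] = None  # destination path, required for LOCAL


def validate(req: WorkingCopyRequest) -> list[str]:
    """Return a list of problems; an empty list means the request looks valid."""
    errors = []
    if req.storage_type not in ("LOCAL", "FPP_MANAGED"):
        errors.append(f"unknown storage type {req.storage_type!r}")
    if req.software_type == "GI" and req.storage_type != "LOCAL":
        # ACFS depends on GI, so a GI home cannot be an ACFS snapshot.
        errors.append("GI working copies must use LOCAL storage")
    if req.storage_type == "LOCAL" and not req.path:
        # With LOCAL storage, the destination directory must be given.
        errors.append("LOCAL storage requires a destination path")
    return errors
```

Returning a list instead of raising on the first problem lets a caller report every issue with a request at once.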

[BrokeDBA]

Now, back to FPP clients: what kind of relationship exists between an FPP client and the FPP server in terms of role and content?

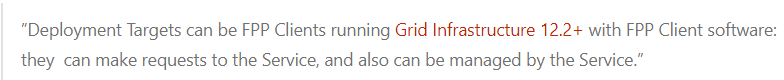

In the documentation, I read the following:

And a bit further on:

Is HA the reason behind the client/server architecture, or could you clarify this relationship a bit more?

[Ludovico]

These two statements are somewhat unrelated. The first says that to promote a cluster to an FPP client (and not just a target), you need at least GI 12.2 if the server is 19c. If the cluster runs GI 12.1, it cannot become a client and stays an unmanaged target. The difference is that a client is “registered”, so further operations on it no longer require a root password.

The client/server relationship is established once and for all, with credential wallets, when running “add client” / “add rhpclient”. Also, once a cluster becomes an FPP client, it can trigger actions on its own (if the local user has the correct roles).

The second statement simply recommends making the FPP server highly available so that it stays available in case of a server failure. As it says, this is not mandatory, but recommended.
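The client-versus-target distinction boils down to a version check. This is a sketch that assumes only what is stated above: a 19c server accepts clusters running GI 12.2 or later as clients. `parse_version`, `classify_cluster` and `needs_root_password` are hypothetical helpers, and other server releases are deliberately left unmodelled.

```python
def parse_version(text: str) -> tuple[int, ...]:
    """Turn '12.2.0.1' into (12, 2, 0, 1) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


# Minimum GI version for a cluster to become an FPP client, keyed by the
# server's major release. Only the 19c case from the interview is encoded.
MIN_CLIENT_GI = {19: parse_version("12.2")}


def classify_cluster(server_major: int, cluster_gi: str) -> str:
    """Return 'client' if the cluster can be registered, else 'target'."""
    minimum = MIN_CLIENT_GI.get(server_major)
    if minimum is None:
        raise ValueError(f"no rule encoded for server release {server_major}")
    return "client" if parse_version(cluster_gi) >= minimum else "target"


def needs_root_password(role: str) -> bool:
    """Registered clients no longer need root credentials; unmanaged targets do."""
    return role == "target"
```

Tuple comparison handles the different version lengths: `(12, 2, 0, 1) >= (12, 2)` is true, while `(12, 1, 0, 2) >= (12, 2)` is false.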

[BrokeDBA]

Does the client store images like an FPP server does, or does it just use the FPP server’s repository to create working copies?

[Ludovico]

The second: clients do not store their own images; they always get them from the server.
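To summarize who holds what, here is a small capability matrix. The role names and capability labels are hypothetical, and the matrix encodes only what the interview states:

- The server stores gold images (in ACFS) and can provision working copies, including to itself.
- A client cannot “add image” and always gets images from the server, but it can trigger actions on its own when the local user has the right roles.
- A plain target only receives working copies.

```python
# Illustrative capability matrix; the labels are hypothetical, not FPP terms.
CAPABILITIES = {
    "server": {"store_images", "add_image", "provision_working_copy",
               "receive_working_copy"},
    # A client may trigger its own actions if the local user has the roles.
    "client": {"receive_working_copy", "trigger_own_actions"},
    "target": {"receive_working_copy"},
}


def image_source(role: str) -> str:
    """Where a host of the given role gets gold images from."""
    if role not in CAPABILITIES:
        raise ValueError(f"unknown role: {role!r}")
    # Only the server keeps an image repository; everyone else pulls from it.
    return "local repository" if "store_images" in CAPABILITIES[role] else "FPP server"


def can(role: str, action: str) -> bool:
    """True if a host with this role has the given capability."""
    return action in CAPABILITIES.get(role, set())
```

The client-side ACFS copy of an image used for FPP_MANAGED working copies is not modelled as `store_images`. It is an implicit by-product of provisioning, not a repository the client manages.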

[BrokeDBA]

What does the FPP_MANAGED storage option mean in practice when provisioning on an FPP client?

Option 1

Add a DB working copy snapshot after importing the image from the FPP server into a local ACFS file system (total size = image + snapshot)?

Option 2

Add a DB working copy as a snapshot directly from the FPP server’s ACFS image, without having to import it first (total size = snapshot)?

[Ludovico]

Option 1: Exactly. The import of the image and the creation of the snapshot are both implicit in the “add workingcopy” command.

Option 2: No. This was possible in 12.1 (NFS working copy provisioning), but it was discontinued because NFS availability was more of a concern than a solution.
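A back-of-envelope calculation shows what Option 1 means for client disk usage. The sketch uses made-up numbers: a 10 GB DB home image and 0.5 GB of growth per snapshot. Real ACFS snapshot usage depends on how much each working copy diverges from its image. The sketch compares N LOCAL full copies with Option 1, where the client holds one imported copy of the image plus one snapshot per working copy.

```python
def local_footprint_gb(image_gb: float, copies: int) -> float:
    """LOCAL: every working copy is a full, independent Oracle home."""
    return image_gb * copies


def fpp_managed_footprint_gb(image_gb: float, copies: int, delta_gb: float) -> float:
    """Option 1 (FPP_MANAGED): one local copy of the image plus one snapshot per copy.

    delta_gb is an ASSUMED per-snapshot growth; ACFS snapshots consume space
    only for blocks that diverge from the image they were taken from.
    """
    if copies == 0:
        return 0.0
    return image_gb + delta_gb * copies


if __name__ == "__main__":
    for n in (1, 3, 5):
        print(n, local_footprint_gb(10, n), fpp_managed_footprint_gb(10, n, 0.5))
```

With these assumed numbers, three working copies cost 30 GB as LOCAL homes and 11.5 GB under Option 1. A single working copy is slightly larger under Option 1 (10.5 GB against 10 GB). The saving only appears once several working copies share the same image.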

[BrokeDBA]

Let’s say we have an existing 12c non-CDB installed (not a working copy) and wish to migrate it to a 19c CDB.

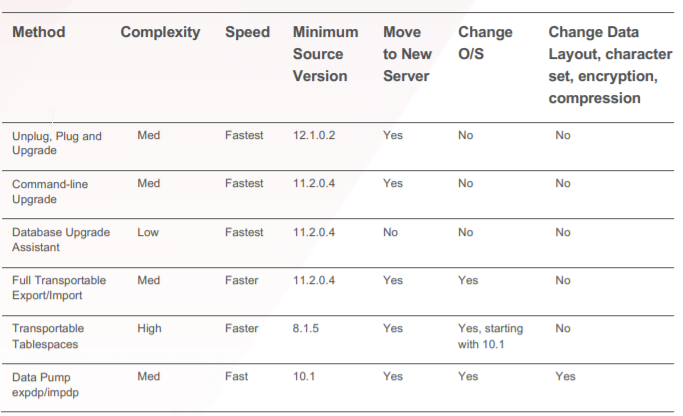

In a normal (non-FPP) world, we usually have the options below (see the 19c migration white paper):

Which scenarios are available in an FPP environment (i.e., an FPP server plus an FPP target hosting a 12c non-CDB database) that allow us to perform the same migration (12c non-CDB to 19c CDB)?

The early documentation wasn’t very clear to me on that scenario. Is multitenant conversion supported too?

[Ludovico]

You can use Fleet Patching and Provisioning to upgrade CDBs, but 19c Fleet Patching and Provisioning does not support converting a non-CDB to a CDB during the upgrade.

Up to 19c, FPP uses DBUA and DBCA in the backend for database upgrade and creation. If you need special DBCA templates, you can create them in the Oracle home and build the gold image from that home. From then on, the working copies provisioned from that image will include the template you need.
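The answer for the 12c non-CDB scenario can be written as a pre-check. This is a minimal sketch under stated assumptions, and `fpp19c_can_upgrade` is a hypothetical helper.

- It encodes Ludovico's statement that 19c FPP upgrades CDBs but will not convert a non-CDB into a CDB during the upgrade.
- It also encodes the general fact that an upgrade never turns a CDB back into a non-CDB.
- A non-CDB-to-non-CDB upgrade is *assumed* to be supported, since the backend is DBUA.

```python
def fpp19c_can_upgrade(source_is_cdb: bool, target_is_cdb: bool) -> bool:
    """True if 19c FPP can perform this upgrade in one step (per the interview)."""
    if not source_is_cdb and target_is_cdb:
        # Non-CDB -> CDB conversion is not done by 19c FPP during the upgrade.
        return False
    if source_is_cdb and not target_is_cdb:
        # An upgrade cannot turn a CDB back into a non-CDB.
        return False
    # CDB -> CDB is stated as supported; non-CDB -> non-CDB is ASSUMED
    # supported here because DBUA handles non-CDB upgrades.
    return True
```

For the question above (12c non-CDB to 19c CDB), the check returns `False`, which matches the answer: the multitenant conversion has to be handled outside the FPP upgrade.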

[BrokeDBA]

I realized that a local database on an FPP target doesn’t have to be a working copy to be upgraded with FPP: a new destination copy can be created on the fly during the upgrade using the “-image” option.

Could you tell me more about this feature?

Syntax: rhpctl upgrade database … [-image 19c_image_name [-path where_path]]

[Ludovico]

Correct, that’s also in the 19c doc.

“… If the destination working copy does not exist, then specify the gold image from which to create it, and optionally, the path to where to provision the working copy.”
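The documented behaviour can be sketched as a resolution step:

- If the destination working copy already exists, it is reused.
- Otherwise a gold image must be supplied, and the path is optional.

`resolve_destination`, the returned dictionary and the example names `wc19` and `db19_img` are hypothetical. The code only mimics the decision described in the quoted documentation and does not invoke `rhpctl`.

```python
from typing import Optional


def resolve_destination(existing_wcs: set[str], destwc: str,
                        image: Optional[str] = None,
                        path: Optional[str] = None) -> dict:
    """Decide whether an upgrade can reuse, or must create, its destination working copy."""
    if destwc in existing_wcs:
        # Destination already provisioned: nothing to create.
        return {"action": "reuse", "workingcopy": destwc}
    if image is None:
        # Without an existing working copy, a gold image is needed to build one.
        raise ValueError(f"working copy {destwc!r} does not exist; specify an image")
    # Create the working copy on the fly; the path stays optional, as in the doc quote.
    plan = {"action": "create", "workingcopy": destwc, "image": image}
    if path is not None:
        plan["path"] = path
    return plan
```

For example, `resolve_destination(set(), "wc19", image="db19_img")` plans a new working copy. Calling it without `image` fails early instead of leaving the upgrade without a destination home.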

[BrokeDBA]

I have an idea of what’s been hot lately for FPP, but could you elaborate on the exciting news in store for FPP in 2021 and beyond?

[Ludovico]

Fleet Patching and Provisioning 21c comes with support for the Oracle AutoUpgrade tool. This simplifies the preparation, execution, and troubleshooting of upgrade campaigns. The feature will hopefully be backported to 19c.

As more and more customers migrate their fleets to Exadata Cloud Service and Exadata Cloud at Customer, the next big development will be toward integrating fleet patching capabilities into the OCI service portfolio. Today, patching cloud services with the on-premises version of FPP is not supported.

As for the long-term vision, FPP will be at the core of all database fleet patching operations within Oracle. Making it easier to implement and maintain will be critical, so expect improvements in this direction in future releases. Sorry, I cannot tell you more 🙂

BrokeDBA Vagrant fork (brokedba’s blog, @solifugo’s blog)

Terraform OCI Stack for testing Oracle Fleet Patching and Provisioning 19c (from Ludovico)

